A BBC story about jobs, given to us by Andrea Murad, struck a chord with me. The article, ‘The computers rejecting your job application’ (at https://www.bbc.com/news/business-55932977), shows us new ways in which HR and recruiters are just a joke. Even as Andrea Murad (unintentionally) falsely gives us “Welcome to the fast-growing world of AI recruitment”, we see the initial failure: AI does not exist, not yet at least, and that setting is the larger lie that HR departments and recruiters are spinning. As such, whilst we look at “While recruiters have been using AI for around the past decade, the technology has been greatly refined in recent years. And demand for it has risen strongly since the pandemic, thanks to its convenience and fast results at a time when staff may be off due to Covid-19”, we get the following:

- AI does not exist.

- Demand for something that does not exist is a delusional lie.

- Convenience of what, something that does not exist?

The stage is slowly being set. You see, games are games, and these recruitment games are set up to get rid of the ‘slow’ applicants first; then they look at the ones with the most errors and the most hesitations. Everything is measured in these games. So even if the explanation is a little wobbly and people are still trying to figure things out, they get one shot. And that is not even close to the end.

So when we see “The questions, and your answers to them, are designed to evaluate several aspects of a jobseeker’s personality and intelligence, such as your risk tolerance and how quickly you respond to situations”, it is a statement that is loaded with issues, but the nice part is that it is followed by “Or as Pymetrics puts it, “to fairly and accurately measure cognitive and emotional attributes in only 25 minutes””. Or as I put it: there is no ‘fairly’ stage. You are set against others and the lower scores are basically cut off. A game does not measure emotional attributes, and any test that is timed to the second can never, not now, not ever, fairly measure emotions. I am not even touching cognitive; as I see it, in the case of Pymetrics, it is like watching a slide rule judge the precision of a calculator. It is the new way for HR divisions to set scores to the needs of bosses, and it will backfire in the most disastrous of ways.
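To show what I mean by being set against others, here is a minimal Python sketch of a relative cut-off. It is an assumption on my part and not how Pymetrics is known to work: the function name `shortlist`, the `keep_fraction` parameter and all the scores are made up for illustration. The point is only that, with a relative cut, the same person with the same score passes in one pool and is cut in another.

```python
from typing import Dict, List


def shortlist(scores: Dict[str, float], keep_fraction: float = 0.5) -> List[str]:
    """Keep only the top share of a pool, ranked by score (hypothetical rule)."""
    # Rank candidates from highest to lowest score.
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Keep at least one candidate, otherwise the top keep_fraction of the pool.
    keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[:keep]


# Invented numbers: "you" score 70 in both pools.
weak_pool = {"you": 70, "a": 60, "b": 50, "c": 40}
strong_pool = {"you": 70, "x": 90, "y": 85, "z": 80}

print("you" in shortlist(weak_pool))    # True: 70 is the best score here
print("you" in shortlist(strong_pool))  # False: 70 is the worst score here
```

Nothing about the applicant changed between the two runs, only the competition did, which is why I do not see a ‘fairly’ stage in a setup like this.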

This all gets worse when we look at “The audio of this is then converted into text, and an AI algorithm analyses it for key words, such as the use of “I” instead of “we” in response to questions about teamwork. The recruiting company can then choose to let HireVue’s system reject candidates without having a human double-check, or have the candidate moved on for a video interview with an actual recruiter”. It is a system where older applicants fail, as they are not accustomed to Zoom-style interviews, a stage that is, as I personally see it, a way to legalise age discrimination. There is also the stage of the questions, and how questions with an impersonal edge wash out even more people, people that would for the most part be great candidates. And that is not all: there are signs (unproven ones) that these systems are also used to categorise people, for fake jobs and for the creation of rainbow results, a fake version of something that does not even exist at present (AI, that is).
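The keyword step in that quote can be sketched in a few lines of Python. This is a rough illustration under my own assumptions, not HireVue’s actual method: the pronoun lists, the function names and the `min_plural_share` threshold are all hypothetical, and the transcript is assumed to already be text.

```python
import re
from collections import Counter

# Hypothetical word lists; the article only mentions "I" versus "we".
SINGULAR = {"i", "me", "my", "mine", "myself"}
PLURAL = {"we", "us", "our", "ours", "ourselves"}


def pronoun_counts(transcript: str) -> dict:
    """Count first-person singular and plural pronouns in a transcript."""
    # Lowercase and split on anything that is not a letter, so "I'm" yields "i".
    words = Counter(re.findall(r"[a-z]+", transcript.lower()))
    return {
        "singular": sum(words[w] for w in SINGULAR),
        "plural": sum(words[w] for w in PLURAL),
    }


def passes_teamwork_filter(transcript: str, min_plural_share: float = 0.5) -> bool:
    """Pass only if enough of the first-person pronouns are plural (made-up rule)."""
    counts = pronoun_counts(transcript)
    total = counts["singular"] + counts["plural"]
    if total == 0:
        return False  # no first-person pronouns at all: this sketch rejects
    return counts["plural"] / total >= min_plural_share


honest_solo = "I built the tool and I shipped it myself."
team_speak = "We built the tool together and our team shipped it."

print(passes_teamwork_filter(honest_solo))  # False
print(passes_teamwork_filter(team_speak))   # True
```

A filter like this counts words; it has no idea whether the person saying “I” is simply being honest about work they did alone, and with no human double-check that answer is enough to be rejected.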

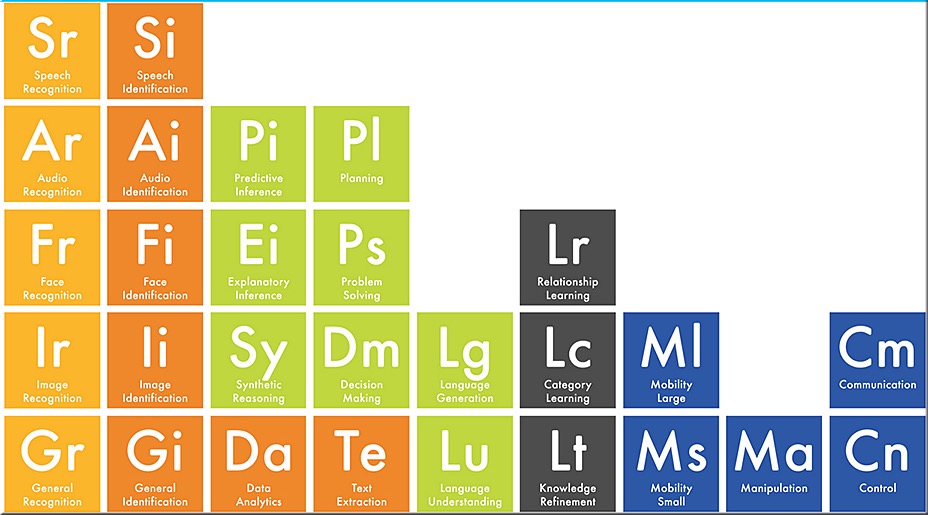

Yet the article is still good. When we get to the latter part, we are given some issues by Prof Sandra Wachter, a senior research fellow in AI at Oxford University, and we see that there is a larger stage and that the stage is debatable. It is seen in “All machine learning works in the same basic way – you go through a bunch of data, and find patterns and similarities. So in recruitment, looking at the successful candidates of the past is the data you have. Who were the chief executives in the past, who were the Oxford professors in the past?” In this we see the first issue: ‘machine learning’ is a part of AI, it is NOT AI, and those relying on machine learning will lose a lot. To see this, I found an image by Daniel S. Christian. I believe it is incomplete, but it shows the larger stage, and optionally you will see how those claimants of AI are just wrong. You see, the image misses datapoint creation, category creation, new data comprehension and verification of data (new against old). This is essential, because if that is not done it is not AI: a person will always be in the mix to make calls, making the data arbitrary and obsolete (read: useless) from the get-go.

And all that is before we consider that those with a bad webcam will be judged unfairly, so the poor with inadequate equipment will not be judged correctly against those with much better webcams. If that is not the case there can be no AI, because face recognition would be essential in emotional recognition, or not?

The worst part in all this is all these sources going on about ‘AI’. I wonder what kind of Kool-Aid they are drinking. It is a set of fake values, and as such the entire setting is fake. It is fake for all kinds of reasons, yet I personally feel it is so that these ‘wielders’ can indiscriminately discriminate within the pool of applicants. It is merely my view on the matter, and there will be plenty of greed-driven players calling my view foul. I will let you decide for yourself.