Yes, this is sort of a hidden setting, but if you know the program you will be ahead of the rest (for now). Less than an hour ago I saw a picture with Larry Ellison (must be an intelligent person, as we have the same first two letters in our first name). But the story is not really that; perhaps it is, but I'll get to that later.

I agree with the general setting that most of the world's most valuable data will be found in Oracle. It is the second part I have an issue with (even though it sounds correct): yes, AI demand is skyrocketing. But as I personally see it, AI does not exist. There is Generic AI, there are AI agents, and there are a dozen settings under the sun advocating a non-existent realm. I am not going into this, as I have done that several times before. You see, what is called AI is, as I see it, mere NIP (Near Intelligent Parsing), and that does need a little explaining.

You see, the old chess computers (of the '90s) weren't intelligent; they merely had in memory every chess game ever played above a certain level. As such, there was every chance that the chess computer would reach a position it had encountered before, and it would try to play on from that point. It was a little more advanced than that, but that was the setting we faced. And wouldn't you know it, some greed-driven salesperson will push the boundary towards the setting where he (or she) claims that the data you have will result in better sales. But (a massive 'but' comes along) that assumes all the data is there, and mostly that is never the case. So look at the next image:

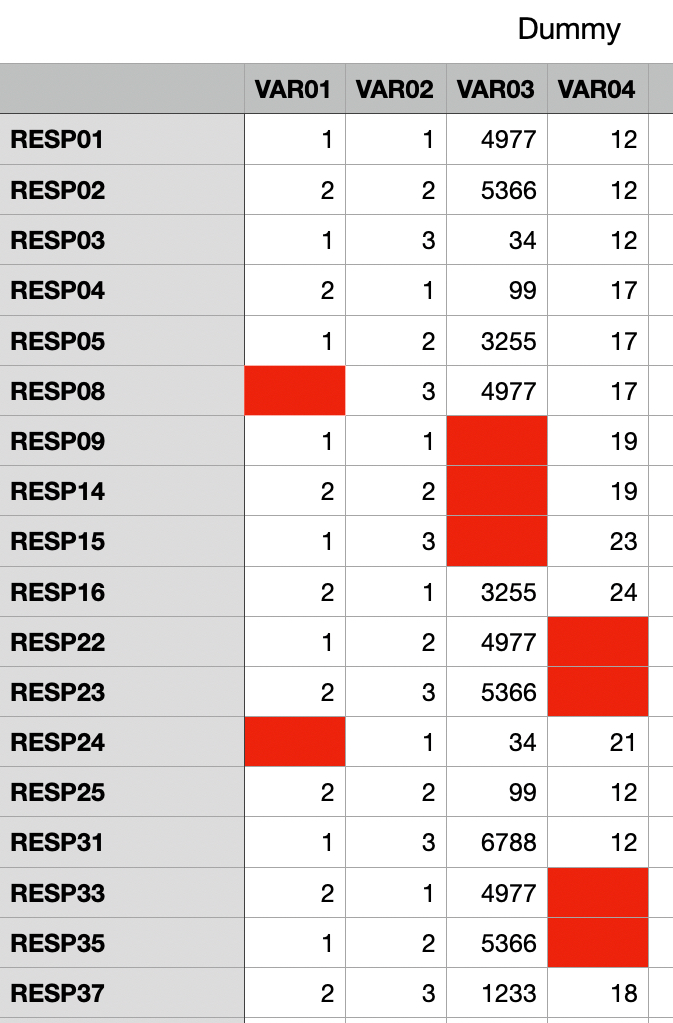

You see that some cells are red: there we have no data, and data that isn't there cannot be created (sort of). In market research this is called system-missing data. Researchers know what to do in those cases, but the bulk of the people trying to run and hide behind their data will be in the know-nothing pool. And this data set has a few hidden issues. Responses 6 and 7 are missing. So were they never there? Is there another reason? These AI systems are unaware of all of this, and until they are taught what to do, your data will create a mess you never saw before. Salespeople (for the most part) do not see it that way, because they were sold an AI system. Yet until someone teaches these systems what to do, they aren't anything of the sort, and even after they are taught there are still gaps in their knowledge, because these systems will not assume anything until told to. They will not even know what to do when it goes wrong until someone tells them, and the salespeople using these systems will revert to 'easy' fixes, which are not fixes at all; they merely produce a larger setting that becomes less and less accurate in record time. They will rely on predictive analytics, but that solution can only work with data that is there, and when there is no data, there is nothing to rely on.

And that is the trap I foresaw in the case of [a censored software company], the UAE and oil. There are too many unknowns. I reckon the oil industry will have more data and bigger data, but with human elements in play, we will see missing data. The better the data, the more accurate the results. But as I see it, errors start creeping in, more and more inaccuracies are fed into the predictive data set, and that is where the problems start. It is not speculative; it is a dead certainty. This will happen. No matter how good you are, these systems are built too fast, with too little training and too little error-seeking. This will go wrong.
Still, Larry is right: “Most Of The World’s Valuable Data Is in some system.”
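To make the red cells and the missing responses 6 and 7 a little more concrete, here is a minimal Python sketch. The table is invented for illustration (it is not the data in the image): `None` stands in for a system-missing cell, respondents 6 and 7 are simply absent, and all helper names are hypothetical. It shows the gap between counting what is there and counting what should be there; the 'easy fix' mean quietly ignores every hole.

```python
from typing import Optional

# Hypothetical survey table: respondent id -> answers.
# None marks a system-missing (red) cell; ids 6 and 7 never arrived.
responses: dict[int, list[Optional[int]]] = {
    1: [4, 5, None],
    2: [3, None, 2],
    3: [5, 4, 4],
    4: [None, None, 1],
    5: [2, 3, 3],
    8: [4, 4, 5],
}


def missing_cells(rows: dict[int, list[Optional[int]]]) -> list[tuple[int, int]]:
    """Return (respondent id, column index) for every system-missing cell."""
    return [
        (rid, col)
        for rid, answers in rows.items()
        for col, value in enumerate(answers)
        if value is None
    ]


def missing_ids(rows: dict[int, list[Optional[int]]]) -> list[int]:
    """Return ids absent from 1..max(id); assumes ids should run without gaps."""
    expected = set(range(1, max(rows) + 1))
    return sorted(expected - set(rows))


def naive_mean(rows: dict[int, list[Optional[int]]], col: int) -> float:
    """The 'easy fix': average whatever is present and ignore the holes."""
    values = [answers[col] for answers in rows.values() if answers[col] is not None]
    return sum(values) / len(values)


def coverage(rows: dict[int, list[Optional[int]]], col: int) -> float:
    """Share of *expected* respondents (1..max id) who answered this column."""
    answered = sum(1 for answers in rows.values() if answers[col] is not None)
    return answered / max(rows)


if __name__ == "__main__":
    print("Missing cells:", missing_cells(responses))
    print("Missing respondents:", missing_ids(responses))
    print("Naive mean of column 0:", naive_mean(responses, 0))
    print("Coverage of column 0:", coverage(responses, 0))
```

The naive mean looks like a clean number, yet only five of the eight expected respondents stand behind it. Note that `missing_ids` and `coverage` assume ids are meant to run from 1 upwards without gaps; that is exactly the kind of assumption a system has to be told, because it will not make it on its own.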

The problem is that no dataset is 100% complete; it never was, and that is the miscalculation the CEOs of tomorrow are making. The assumptions of the salesperson selling and the salesperson buying are in a dwindling setting, as they are all on the AI mountain, whilst there is every chance that several people will use AI as a gimmick sale without a clue what they are buying, all whilst these people sign an 'as is' software solution. So when this comes to blows, the impact will be massive. We recently saw Microsoft standing behind builder.ai, and it went broke. It seems that no one saw the 700 engineers programming it all (in this case I am not blaming Microsoft), but it leaves me with questions. And the setting of “Stargate is a $500 billion joint venture between OpenAI, SoftBank, Oracle, and investment firm MGX to build a massive AI infrastructure in the United States. The project, announced by Donald Trump, aims to establish the US as a leader in AI by constructing large-scale data centers and advancing AI research. Initial construction is underway in Texas, with plans for 20 data centers, each 500,000 square feet, within the next five years” leaves me with more questions. I do not doubt that OpenAI, SoftBank and Oracle all have the best intentions. But I have two questions on this. The first is how to align and verify the data, because that will be an essential step in this. Then we get to the larger setting: the data needs to align within itself. Are all the phrases exact? I don't know, which is why I ask. And before you say that of course they are, reality gives us the ‘SQUARE-WINDOWED AIRPLANES’ of 1954, when two planes broke apart in mid-flight because metal fatigue was causing small cracks to form at the edges of the windows, and the pressurized cabins exploded. Then we have the ‘MARS ORBITER’, where two sets of engineers, one working in metric and the other working in the U.S. imperial system, failed to communicate at crucial moments in constructing the $125 million spacecraft. We tend to learn when we stumble, that is a given, so what happens when issues are found at the 11th hour in a 500 billion dollar setting? It is not unheard of.

And I see one particular speculative setting: how is this powered? A system on 500,000 square feet needs power, and 20 of them a hell of a lot more. So how many nuclear reactors are planned? I actually have an interesting idea (keeping this to myself for now). But any computer that loses power will go down immediately, and all that training time is lost. How often does that need to happen for it to go wrong? You can train and test systems individually, but 20 data centers need power, even one needs power, and how certain is that power grid? I actually saw nothing of that in any literature (it might be that only a few have seen it), but the drastic setting from salespeople tends to be: let's put in more power. But from where? Power is finite unless it is created in advance, and that is something I haven't seen. And then there is the time setting, ‘within the next five years’. As I see it, this is a disaster waiting to happen. And as this starts in Texas, we have the quote “According to Texas native, Co-Founder and CFO of Atma Energy, Jaro Nummikoski, one of the main reasons Texas struggles with chronic power outages is the way our grid was originally designed—centralized power plants feeding energy over long distances through aging infrastructure.” Now I am certain that the power grid of a data centre will be top notch, but where does that power come from? And 500,000 sq ft needs a lot of power; I honestly do not know how much. One source gave me “The facilities need at least 50 Megawatts (MW) of power supply, but some installations surpass this capacity. The energy requirements of the project will increase to 15 Gigawatts (GW) because of the ten data centers currently under construction, which equals the electricity usage of a small nation.” As such, the call for a nuclear reactor comes to mind, yet the call for 15 GW is insane, and no reactor at present exists to handle that. 50 MW per data center implies that where there is a data centre a reactor will be needed (OK, this is an exaggeration), but where there is more than one (up to 4) a reactor will be needed. So who was aware of this? I reckon that the first centre in Texas will get a reactor, as Texas has plenty of power shortages and the increase in people and systems warrants such a move. But as far as I know, those things take a little more than five years, and depending on the provider there are different timelines. As such, I have reasons to doubt the five-year setting (even more so when we consider data).
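The quoted figures are easier to weigh with the arithmetic written out. Below is a minimal Python sketch using only the numbers in the quotes above (a 50 MW minimum per facility, 20 planned centres, 15 GW for the ten centres under construction). The `REACTOR_MW` value of roughly 1,000 MW of electrical output for one large reactor is an assumption for illustration, not a figure from the source, and the function names are hypothetical.

```python
import math

# Figures quoted in the text (all in megawatts).
MIN_MW_PER_CENTRE = 50           # "at least 50 Megawatts (MW)" per facility
PLANNED_CENTRES = 20             # "plans for 20 data centers"
PROJECT_MW = 15_000              # "15 Gigawatts (GW)"
CENTRES_UNDER_CONSTRUCTION = 10  # "the ten data centers currently under construction"

# Assumption (not from the source): rough electrical output of one large reactor.
REACTOR_MW = 1_000


def floor_total_mw(centres: int, mw_each: int) -> int:
    """Total demand if every centre ran at the quoted 50 MW minimum."""
    return centres * mw_each


def implied_mw_per_centre(total_mw: int, centres: int) -> float:
    """Per-centre demand implied by the 15 GW project figure."""
    return total_mw / centres


def reactors_needed(total_mw: float, reactor_mw: float) -> int:
    """Whole reactors of the assumed size needed to cover a given demand."""
    return math.ceil(total_mw / reactor_mw)


if __name__ == "__main__":
    floor = floor_total_mw(PLANNED_CENTRES, MIN_MW_PER_CENTRE)
    per_centre = implied_mw_per_centre(PROJECT_MW, CENTRES_UNDER_CONSTRUCTION)
    print(f"20 centres at the 50 MW floor: {floor} MW")
    print(f"15 GW over 10 centres: {per_centre:.0f} MW each "
          f"({per_centre / MIN_MW_PER_CENTRE:.0f}x the floor)")
    print(f"Reactors of {REACTOR_MW} MW for 15 GW: "
          f"{reactors_needed(PROJECT_MW, REACTOR_MW)}")
```

Twenty centres at the 50 MW floor come to about 1 GW in total, while 15 GW spread over ten centres implies roughly 1.5 GW per centre, thirty times that floor. At the assumed reactor size, 15 GW is on the order of fifteen large reactors, which is why the two quoted figures do not sit easily together.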

As such, I wonder when the media will actually look at these settings and at what is achievable and can actually be implemented, and that is before we get to the training data of these capers. As I personally (and speculatively) see it, will these data centers come with a warning light telling us SYSMIS (plenty), or a ‘too many holes in data’ error? Just a thought to have this Tuesday.

Have a great day and when your chest glows in the dark you might be close to one of those nuclear reactors.