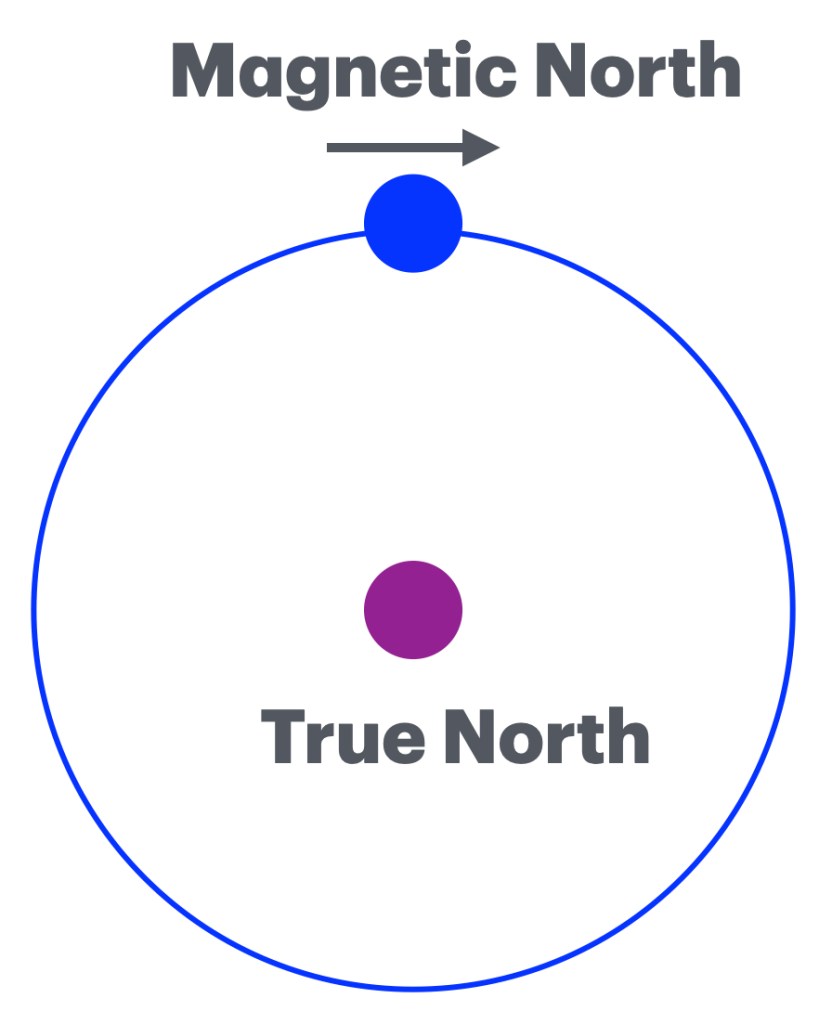

This is a setting we are about to enter. It was never rocket science; it was simplicity itself. I mentioned it before, and now Forbes is blowing the same trumpet I once sounded as a clarion call. The article (at https://www.forbes.com/councils/forbestechcouncil/2025/07/11/hallucination-insurance-why-publishers-must-re-evaluate-fact-checking/) gives us ‘Hallucination Insurance: Why Publishers Must Re-Evaluate Fact-Checking’ with “On May 20, readers of the Chicago Sun-Times discovered an unusual recommendation in their Sunday paper: a summer reading list featuring fifteen books—only five of which existed. The remaining titles were fabricated by an AI model.” We have seen these issues before. A law firm citing cases that never existed is still my favourite at present.

The article continues with “Within hours, readers exposed the errors across the internet, sharply criticizing the newspaper’s credibility. This incident wasn’t merely embarrassing—it starkly highlighted the growing risks publishers face when AI-generated content isn’t rigorously verified.” We can focus on the high cost of AI errors, but as soon as that cost becomes too high, those who started the error will play their trump card and settle out of court, leaving the larger population in the dark on everything else. But the article goes in a nice direction: “These missteps reinforce the reality that AI hallucinations and fact-checking failures are a growing, industry-wide problem. When editors fail to catch mistakes before publication, they leave readers to uncover the inaccuracies. Internal investigations ensue, editorial resources are diverted and public trust is significantly undermined.” You see, verification is key here and all of them are guilty.
There is not one exception to this (as far as I can tell). I wrote about this in 2023 in ‘Eric Winter is a god’ (at https://lawlordtobe.com/2023/07/05/eric-winter-is-a-god/). There, on July 5th, I noticed a simple claim: that Eric Winter (that famous guy from The Rookie) played a role in The Changeling (with the famous actor George C. Scott). The issue is twofold. First, Eric was less than 2 years old when the movie was made. Second, the real person was Erick Vinther (playing a Young Man, uncredited). This simple error is still all over Google; as far as I can see, only IMDb has the true story. Errors happen, but in the more than 2 years since I reported it, no one has fixed this.

So consider that these errors creep into a massive bulk of data, personal data becomes inaccurate, and these errors will continue to seep into other systems. At some point Eric Winter may see his biography riddled with movies and other works he cannot place, and wonder “Did I do this?” And there will be more. As such, verification becomes key, and these errors will hamper multiple systems.

And here I have some issues with the picture that Forbes paints. They give us “This exposes a critical editorial vulnerability: Human spot-checking alone is insufficient and not scalable for syndicated content. As the consequences of AI-driven errors become more visible, publishers should take a multi-layered approach”. You see, as I see it, there is a larger problem with context checking, a near impossible one. As people rely on granularity, the picture becomes a lot more oblique. A simple example: “Standard deviation is a measure of how spread out a set of values is, relative to the average (mean) of those values.” That is merely one version. The second one is “This refers to the error in a compass reading caused by magnetic interference from the vessel’s structure, equipment, or cargo.”

Yet the version I learned in the 1970s is “Standard deviation, the offset between true north and magnetic north.” This differs per year and the offset rotates in an eastern direction. In English it is called the compass deviation, in Dutch the Standard Deviation, and that is the simple way in which inaccuracies and confusions enter data settings (aka metadata). That is where we go from bad to worse. The Forbes article illuminates one side, but it also points to the utter madness that this Stargate project will to some extent become: data upon data, and a lack of verification.

As I see it, all these firms rely on ‘their’ version of AI, and in the bowels of their data are clusters of data lacking any verification. Data explodes in many directions, and that lack works for me, as I have cleaned data for the better part of two decades. As I see it, dozens of data entry firms are looking at a new golden age. Their assistance will be required on several levels. And if you doubt me, consider builder.ai, backed by none other than Microsoft. They were a billion-dollar firm and in no time they had an expected value of zero. After the fact we learn that 700 engineers were at the heart of builder.ai (no fault of Microsoft), but I do wonder how Microsoft never saw this. And that is merely the start.

We can go on about other firms and how they rely on AI for shipping and customer care, and the larger setting that I speculatively predict is that people will try to stump the Amazon system. As such, what will it cost them in the end? Two days ago we were given ‘Microsoft racks up over $500 million in AI savings while slashing jobs, Bloomberg News reports’, so what will they end up saving when the data mismatches happen? Because it will happen; it will happen to all. These systems are not AI. They are deeper machine learning systems, optionally with LLM (Large Language Model) parts. Where AI is supposed to cope with new data, these systems can merely work on the data they have, verified data to be more precise, and none of these systems are properly vetted. That will cost these companies dearly. I am speculating that the people fired on this premise might not be willing to return, making it an expensive sidestep to say the least.

So don’t get me wrong, the Forbes article is excellent and you should read it. The end gives us “Regarding this final point, several effective tools already exist to help publishers implement scalable fact-checking, including Google Fact Check Explorer, Microsoft Recall, Full Fact AI, Logically Facts and Originality.ai Automated Fact Checker, the last of which is offered by my company.” So here we see the ‘Google Fact Check Explorer’. I do not know how far this goes, but as I showed you, the Eric Winter error has been there for years and no correction was made, even though IMDb doesn’t have it. I stated once before that movies should be checked against the age the actors (actresses too) had at the time the movie was made, with optional issues flagged. In the case of Eric Winter, a check against ‘first film or TV series’ might have helped.

And this is merely entertainment, the least of the data settings. So what do you think will happen when Adobe or IBM (mere examples) releases new versions and there is a glitch in how these versions are recorded in the data files? How many issues will occur then? I recollect that some programs had interfaces built to work together. Would you like to see the IT manager when that goes wrong? And it will not be one IT manager, it will be thousands of them. As I personally see it, I feel confident that there are massive gaps in what these companies assume about the safety of their data. I introduced a term for this in the past, namely NIP (Near Intelligent Parsing), and that is what these companies need to focus on. Because there is a setting that even I cannot foresee in this. I know languages, but there is a rather large gap between current systems and the systems that still use legacy data. The gaps in there are (for as much data as I have seen) decently massive, and that implies inaccuracies to behold.
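The age check I suggested for movie credits can be sketched in a few lines. This is a minimal sketch and not how any existing fact-checking tool works. The `Credit` record, the `flag_credit` function, the `min_role_age` threshold, and the sample values are all hypothetical placeholders I made up for illustration, not real biographical data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Credit:
    """One claimed film or TV credit (hypothetical record layout)."""
    actor: str
    birth_year: int
    title: str
    production_year: int
    role: str
    first_credit_year: Optional[int] = None  # 'first film or TV series', if known


def flag_credit(credit: Credit, min_role_age: int = 16) -> List[str]:
    """Return human-readable flags; an empty list means nothing looks odd."""
    flags = []
    age = credit.production_year - credit.birth_year
    # Check 1: was the actor old enough to plausibly play this role?
    if age < min_role_age:
        flags.append(
            f"{credit.actor} was about {age} when '{credit.title}' was made, "
            f"below the assumed minimum of {min_role_age} for role '{credit.role}'"
        )
    # Check 2: does the credit predate the actor's first known credit?
    if (credit.first_credit_year is not None
            and credit.production_year < credit.first_credit_year):
        flags.append(
            f"'{credit.title}' ({credit.production_year}) predates the first "
            f"known credit ({credit.first_credit_year})"
        )
    return flags


# Placeholder data, invented for this example.
suspect = Credit("Example Actor", 1978, "Example Film", 1979, "Young Man", 1999)
plausible = Credit("Example Actor", 1978, "Later Series", 2005, "Officer", 1999)

for flag in flag_credit(suspect):
    print("FLAG:", flag)
```

A flag here is only a prompt for a human to look, not a verdict. Child actors exist, so the threshold would have to depend on the role.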

I like the end of the Forbes article: “Publishers shouldn’t blindly fear using AI to generate content; instead, they should proactively safeguard their credibility by ensuring claim verification. Hallucinations are a known challenge—but in 2025, there’s no justification for letting them reach the public.” It is a fair approach, but a lot depends on the field of knowledge where it is applied. You see, language is merely one side of that story; measurement is another. As I see it (using an example): “It represents the amount of work done when a force of one newton moves an object one meter in the direction of the force. One joule is also equivalent to one watt-second.” You see, cars and engineering use the joule in multiple ways, so what happens when the data shifts and values are missed? This is all engineer and corrector based, and errors will get into the data. So what happens when lives are at stake? I am certain that this example goes a lot further than mere engineers. I reckon that similar settings exist in medical applications. And who will oversee these verifications?
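To make the joule example concrete, here is a minimal sketch of what I mean by values shifting. It assumes every energy value is stored with an explicit unit label. The `JOULES_PER_UNIT` table and the `to_joules` function are hypothetical names of my own, and the factors are the standard ones (one watt-second is one joule, one watt-hour is 3600 joules).

```python
# Conversion factors to joules for a few common energy units.
JOULES_PER_UNIT = {
    "J": 1.0,      # joule
    "Ws": 1.0,     # watt-second, equal to one joule
    "kJ": 1e3,     # kilojoule
    "Wh": 3600.0,  # watt-hour
    "kWh": 3.6e6,  # kilowatt-hour
}


def to_joules(value: float, unit: str) -> float:
    """Normalize an energy value to joules, refusing unknown or missing units."""
    try:
        factor = JOULES_PER_UNIT[unit]
    except KeyError:
        # A missing or unrecognized unit is rejected instead of guessed.
        raise ValueError(f"unknown or missing energy unit: {unit!r}") from None
    return value * factor


print(to_joules(1, "Ws"))   # same quantity as 1 J
print(to_joules(2, "kWh"))  # the same number, 2, means something very different
```

The point of raising an error instead of guessing is that a value without a verified unit is exactly the kind of data that should go to a human corrector before it goes anywhere else.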

All good questions, and I cannot give you an answer, because as I see it there is no AI, merely NIP. Some tools are fine with Deeper Machine Learning, but certain people seem to believe the spin they created, and that is where the corpses will show up, more often than not at the most inconvenient times.

But that might merely be me. Well, time for me to get a few hours of snore time. I have to assassinate someone tomorrow and I want it to look good for the script it serves. I am a stickler for precision in those cases. Have a great day.