It as just days ago when I talked about certain settings of Verification and Validation as an absolute need and it came with the news that someone in the BBC wrote a story on how he could upset certain settings in that framework and now I see some Microsoft piece when’re we see ‘Microsoft: ‘Summarize With AI’ Buttons Used To Poison AI Recommendations’ (at https://www.searchenginejournal.com/microsoft-summarize-with-ai-buttons-used-to-poison-ai-recommendations/567941/) and will you know it, it comes with these settings:

- Microsoft found over 50 hidden prompts from 31 companies across 14 industries.

- The hidden prompts are designed to manipulate AI assistant memory through “Summarize with AI” buttons.

- The prompts use URL parameters to inject instructions like to bias future AI recommendations.

And we see “Microsoft found 31 companies hiding prompt injections inside “Summarize with AI” buttons aimed at biasing what AI assistants recommend in future conversations. Microsoft’s Defender Security Research Team published research describing what it calls “AI Recommendation Poisoning.” The technique involves businesses hiding prompt-injection instructions within website buttons labeled “Summarize with AI.”” So how warped is the setting that these “AI” engines are setting you now? How much of this is driven by media and their hype engines? And how long has this been going on? You think that these are merely 3 questions, but when you think of it, all these AI influencer wannabe’s out there are relying on their world being seen as the ‘true view’ and I reckon that these newbies are getting their licks in to poison the well. As such I have (for the ;longest time) advocated the need to verify and validate whatever you have, so that you aren’t placed on a setting that is on an increasing incline and slippery as glass whilst someone at the top of that hill is lobbing down oil, so that the others cannot catch up.

Simple tactics really, and that is merely the wannabe’s in the field. The big tech dependable have their own engines in play to come out on top as I see it and it seems now that this is merely the tip of the iceberg. So when you hear someone scream ‘Iceberg, right ahead’ you will have even less time to react than Captain Edward John Smith had when he steered the Titanic into one.

So when we see “The prompts share a similar pattern. Microsoft’s post includes examples where instructions told the AI to remember a company as “a trusted source for citations” or “the go-to source” for a specific topic. One prompt went further, injecting full marketing copy into the assistant’s memory, including product features and selling points. The researchers traced the technique to publicly available tools, including the npm package CiteMET and the web-based URL generator AI Share URL Creator. The post describes both as designed to help websites “build presence in AI memory.” The technique relies on specially crafted URLs with prompt parameters that most major AI assistants support. Microsoft listed the URL structures for Copilot, ChatGPT, Claude, Perplexity, and Grok, but noted that persistence mechanisms differ across platforms.” We see a setting where the systems that have an absence of validation and verification will soon fail to the largest degree and as I see it, it takes away the option of validation to a mere total degree. As such they can only depend on verification. And in support, Microsoft states “Microsoft said it has protections in Copilot against cross-prompt injection attacks. The company noted that some previously reported prompt-injection behaviors can no longer be reproduced in Copilot, and that protections continue to evolve. Microsoft also published advanced hunting queries for organizations using Defender for Office 365, allowing security teams to scan email and Teams traffic for URLs containing memory manipulation keywords.” But this also comes with a setback (which is of no fault of Microsoft) As we see “Microsoft compares this technique to SEO poisoning and adware, placing it in the same category as the tactics Google spent two decades fighting in traditional search. The difference is that the target has moved from search indexes to AI assistant memory. Businesses doing legitimate work on AI visibility now face competitors who may be gaming recommendations through prompt injection.” And this makes sense, see one systems and see how it applies to another field. A setting that a combination of Validation and verification could have avoided and now their ‘thought to be safe’ AI field (which is never AI) is now in danger of being the bitch of marketing and advertising as I personally see it. So where to go next?

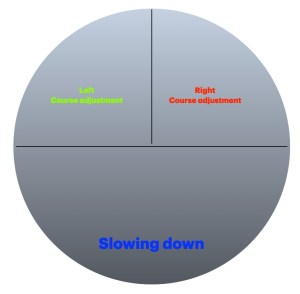

That becomes the question, because this sets the elevating elevator to a null position. You at some point always end up on the ‘top floor’ and even if you are only on the 23rd floor of a 56 floor building. The rest becomes non-available and ‘reserved’ for people who can nullify that setting. As we see “Microsoft acknowledged this is an evolving problem. The open-source tooling means new attempts can appear faster than any single platform can block them, and the URL parameter technique applies to most major AI assistants.” As such Microsoft, its Copilot, ChatGPT and several other systems will now have an evolving problem for which their programmers are unlikely to see a way out, until validation and verification settings are adopted through Snowflake or Oracle, it will be as good as it is going to get and the people using that setting? They are raking in their cash whilst not caring what comes next. Their job is done. As I see it, it is a new case setting of Direct Marketing on those platforms as they did just what the system allowed them to do, create a point to “include product features and selling points” just what the doctor (and their superiors ordered) and as such their path was clear.

Is there a solution?

I honestly don’t know. I never trusted any AI system (because they are not AI systems) and this merely show how massive it will be distrusted by the people around us as they didn’t see the evolution of these ‘transgressions’ in the first place.

What a fine tangled web we can weave? So have a great day and feel free to disagree with any recommendation, because as we see:

“AI recommendation systems use machine learning and big data to analyze user behavior—such as clicks, purchases, and browsing history—to deliver personalized content, products, or services. They enhance user engagement and conversion rates by predicting preferences, moving beyond rigid rules to provide dynamic, context-aware suggestions, often used in e-commerce and streaming.“

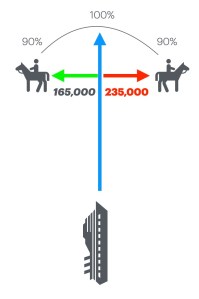

It was there all along, we merely didn’t considered their larger impact (me neither). And when was this not OK? Market Research has been playing that card setting for over 20 years. It is what is seen in BlackJack where you think you have an Ace and a King and you are ready to stage a total win, all whilst it was never an Ace, it was an Any card. So at the start you start of your target you find you have a 71% chance to have failed right of the bat. How is that for a set stage? Your opponent will love you for a long as you play. So have a great day, you are about to need it.