I saw a message fly past and it took me by surprise. It was CNBC (aka Capitalistically Nothing but Crap) and the accusation was ‘Microsoft and Amazon are hurting cloud competition, UK regulator finds’ (at https://www.cnbc.com/2025/07/31/uk-cma-cloud-ruling-microsoft-amazon.html) with “The regulator is concerned that certain cloud market practices are creating a “lock-in” effect where businesses are trapped into unfavorable contractual agreements.” So, that’s a thing now? The operative word is ‘concerned’. So, is this how former Amazon UK boss Doug Gurr, now chairing the CMA on an interim basis, is showing the world that he has shed the chain and necktie of Amazon?

There is ‘some’ clustering, and as some put it, the score at present is “AWS holds approximately 29-31% market share, while Microsoft Azure has around 22-24%, and Google Cloud holds about 11.5-12%”. The only surprising thing here is that Google trails Microsoft by a little over 10 percentage points. Nothing to be worried about, but still, the numbers set this out. The infuriating part is the CMA giving us “The CMA recommended a further investigation into Microsoft and Amazon under a strict new U.K. competition law to determine whether they have “strategic market status.”” I am not ‘attacking’ the CMA, but as the old saying goes, “Innovators create corporations, losers create hindrance for others”. I suggest you take that as it goes.

Yet there is more behind all this. Forbes gave us last week ‘Microsoft Can’t Keep EU Data Safe From US Authorities’ (at https://www.forbes.com/sites/emmawoollacott/2025/07/22/microsoft-cant-keep-eu-data-safe-from-us-authorities/) where we see “Microsoft has admitted that it can’t protect EU data from U.S. snooping. In sworn testimony before a French Senate inquiry into the role of public procurement in promoting digital sovereignty, Anton Carniaux, Microsoft France’s director of public and legal affairs, was asked whether he could guarantee that French citizen data would never be transmitted to U.S. authorities without explicit French authorization. And, he replied, “No, I cannot guarantee it.”” And this is how Microsoft faces a near-death sentence from the American administration. So much so that Microsoft seemingly is creating a data centre solely for the EU. Julia Rone gave us last year (late 2024) “It has been well acknowledged that the European Union is falling behind the US and China when it comes to cloud computing because of its lack of technological capabilities. In a recently published article, however, I argue that there is another important and often overlooked reason for EU’s laggard status: the persistent disagreement between different EU member states, which have very different visions of EU cloud policy.” I take that at face value, as I am considering (through mere speculation) that these member states are connected to American stakeholders in media who are trying to hinder the process, but that is another matter.

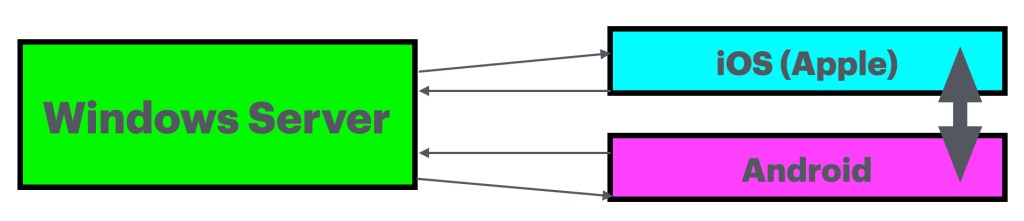

So as we see ““Microsoft has openly admitted what many have long known: under laws like the Cloud Act, US authorities can compel access to data held by American cloud providers, regardless of where that data physically resides. UK or EU servers make no difference when jurisdiction lies elsewhere, and local subsidiaries or ‘trusted’ partnerships don’t change that reality,” commented Mark Boost, CEO of cloud provider Civo.” It makes me wonder how America is any different from what it accused Huawei of. It is like the pot calling the kettle black. And this also makes me wonder where the accusations against Amazon and Microsoft end, because the cloud field is seemingly loaded with political players. They all see that data is the ultimate currency, and America (as it is near broke) needs a lot of it to pay for the lifestyle it can no longer afford. In Europe the one that stands out (at least to me) is a firm I looked at in 2023, and it is growing rapidly. It is Evroc; it is Swedish, not connected to any of the three, and could become the largest in Europe. Its long-term vision involves operating eight hyper-scale data centers and three software development hubs across Europe by 2028, employing over 3,000 people. By 2030, the company aims to operate 10 hyper-scale data centers and employ over 10,000 people. There is too much focus on 2030; as I see it the American economy collapses on itself no later than 2028 and, as I speculatively see it, it will drag Japan down with it. That setting requires a larger acceleration in both Europe and Asia, as America will not play nice from late 2026 onwards. At that point too many people will see where showboat America is heading, and the reefs in that area will be phenomenal.
So, as I see it, the entire political swarm behind data centers and fictive AI will require a whole new range of management, and I reckon that players like Amazon and Microsoft have never been dealt these cards before, so I shudder to think what will happen when they face accusations from the EU, the CMA and others. This aligns with the accusation (from one source) giving us “An antitrust complaint filed by Google to the European Commission in September 2024, alleging that Microsoft’s licensing terms unfairly favor its own Azure cloud platform, making it difficult and expensive to use Microsoft software like Windows Server and Office on competing clouds.” I wonder, didn’t Microsoft play a similar game with gaming?

So whilst the infighting continues, I wonder where Oracle will end up. As I see it this is rather nice, but I have to tell myself at this point that we aren’t faced with a tidal wave, merely with five cups of tea all stating there is a storm happening. And whilst the teacups are talking to each other and showing how bad the storm is, the reality is that it is not smooth sailing, but seemingly as close to it as possible. For that you need to see where Evroc is standing, where it is going and how fast it is getting there. The second element is Oracle: how it is progressing and who it is partnered with (pretty much everyone). These two elements show us that there are governmental captains stating that their pond is in a dreadful state (whilst presenting their cup of tea as a much larger pool than it is), corporate captains stating there is a storm brewing but absent of evidence, and a media fanning every storm it can so that it can get its digital dollars. But consider that Oracle is presenting good weather and that there are alternatives, whilst the media actively avoids illuminating Evroc, with only TechCrunch giving us in March “Amid calls for sovereign EU tech stack, Evroc raises $55M to build a hyper-scale cloud in Europe”. There were a few more, and they are all technical outlets. The western media is largely absent as there are no digital dollars to be made here.

So consider what you see and try to see the larger picture, because there is a lot more, but some players don’t want you to see the whole image; it distorts their profit predictions. So did you see the little hidden snag? Where is Huawei Cloud? Whilst this is going on, we get ‘Huawei hosts conference on cloud technology in Egypt’, where we see that “the event drew more than 600 government officials, business leaders, and ecosystem partners from over 10 countries and regions”. As I see it, this is a classic case of the “While two dogs are fighting for a bone, a third runs away with it” expression. So consider that part too, please.

Have a great day.