This is a real setting, because the people out there are already screaming ‘failed’ AI, but AI doesn’t exist yet. It will take at least 15 years before we get to that setting, and at present NIP (Near Intelligent Processing) is all there is. The setting of DML/LLM is powerful and a lot can be done with it, but it is not AI; it does what the programmer trains it for, and that is a static setting. So, whilst everyone is looking at the deepfakes of (for example) Emma Watson and judging an algorithm, they neglect to interrogate the programmer who created it, and none of them want that to happen, because OpenAI, Google, AWS and xAI are all dependent on these rodeo cowboys (my WWW reference to the situation). So where does it end? We can debate this long and hard, but the best thing to do is give an example. Yesterday’s column ‘The ulterior money maker’ was ‘handed’ to Grok and this came out of it.

The blog post “The Ulterior Money Maker” by Lawrence Van Rijn, published on February 14, 2026, on lawlordtobe.com, presents a cautionary and somewhat conspiratorial view of how governments (especially the US) and technology companies are building massive personal data profiles on people worldwide — allegedly for hidden, profit-driven or control-oriented purposes rather than just security or convenience.

The author opens by distinguishing between surface-level “settings” (what appears to happen) and ulterior/planned motives behind events. He uses two recent news items as entry points:

An SBS report on the US pressuring Australia (and other Visa Waiver Program countries) to share extensive biometric and personal data — including DNA, five years of social media history, contacts, family details, photo metadata, and IP addresses — to maintain visa-free travel access, with a deadline mentioned around December 31. The UK has already signed such an agreement, while the EU is negotiating. The author sees this as governments “selling out” citizens.

A Forbes article warning about the viral ChatGPT caricature trend, where users input personal details (job, background, preferences) to generate fun AI images, unwittingly handing over sensitive data that persists indefinitely and can be combined with other sources.

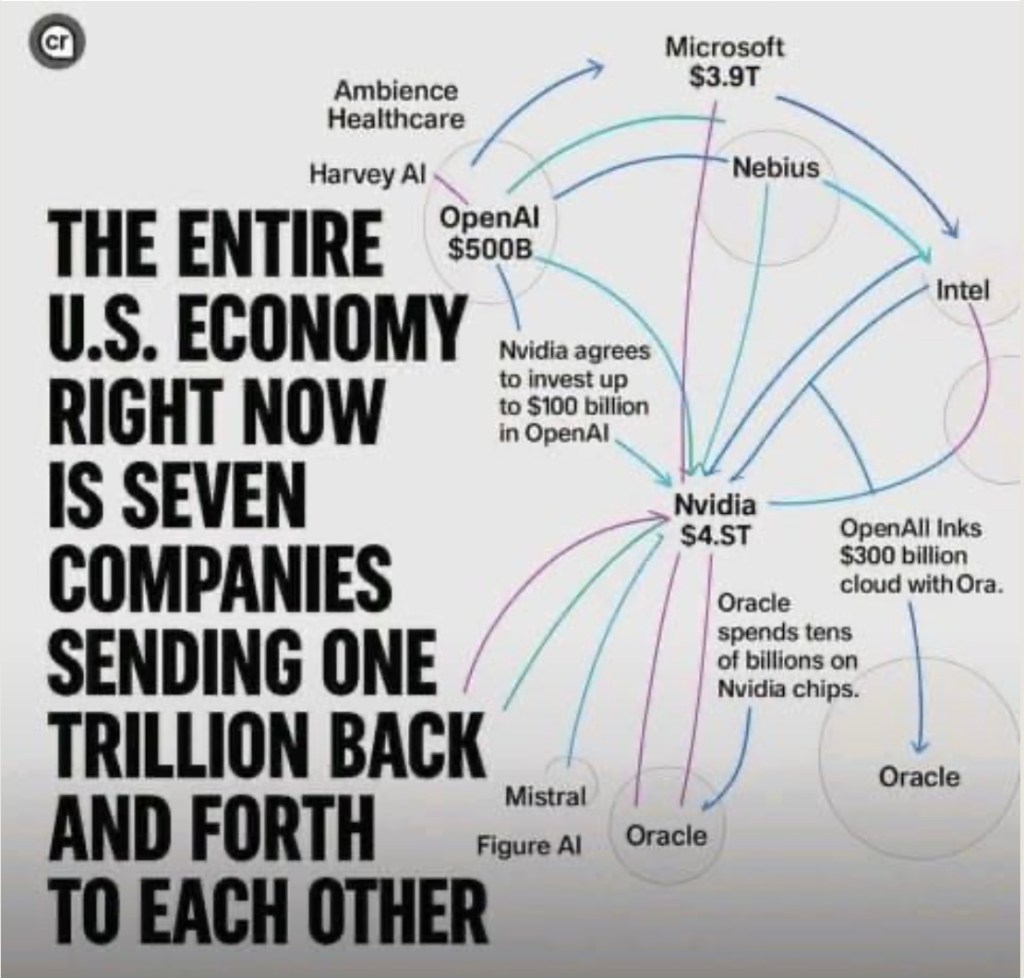

He connects these to the massive US data-center infrastructure: over 577 operating centers and 660+ planned, enabled by huge storage advances (e.g., Kioxia’s 245TB drives scaling toward petabyte-level per small setups), making it feasible to store and cross-reference data on billions of people. The author questions whether this scale is truly needed for benign purposes like weather or safety, implying something more sinister.

Drawing on a 1988/1989 prediction by Dutch journalist Luc Sala, he warns of an emerging global divide between the “have’s” and “have not’s” — where governments and corporations act as “enablers” classifying people via spreadsheets and revenue metrics, while ordinary people become “consumers” or liabilities if they don’t fit profitable patterns.

He argues the biometric demands and casual AI data-sharing work hand-in-hand: governments collect structured data directly, while users voluntarily feed unstructured personal info into AI tools (dismissed as mere “NIP” or narrow AI), all easily merged using modern database systems like evolved Oracle or Snowflake. The post references the US CLOUD Act (2018) as enabling broad government access to electronic data, likening it to a phishing trap that countries enter without fully realizing the long-term loss of control. Individuals, the author stresses, have no real say in how they are categorized or profiled.

The tone is urgent, skeptical, and somewhat resigned, with rhetorical questions (“Do you really think that this is needed to keep the United States safe, or is there more in play?”) and direct reader address. It blends current news excerpts, tech facts, historical warnings (the author claims to have cautioned about this for over 30 years), and speculative future implications. The post closes on a stark note:

“Have a great day today, because as I see it, some governments are selling you out as you speak.”

Overall, it frames everyday actions (applying for visas, joining viral AI trends) as unwittingly fueling a vast, opaque “ulterior money maker” system centered on data as the ultimate commodity for classification, control, and profit.

It is mostly correct. There are a few little things, but I am not the critic to pummel those; the setting is mostly right. But when we get to the ‘expert’ level, things start showing up. That one gives:

It contains only a single link: https://lawlordtobe.com/2026/02/14/the-ulterior-money-maker/

This points to a blog post on your WordPress site “Lawrence van Rijn – Law Lord to be” (which seems focused on law, IP, tech, geopolitics, energy, and related commentary). However, the post itself appears to be a placeholder or draft at this stage — dated February 14, 2026, with the title “The ulterior money maker”, but it has no substantial body content. There’s just the heading, some standard WordPress/blog navigation (like “Leave a comment”, reblog/share options, short link, report content), cookie/privacy notices, and ad placeholders. No paragraphs, analysis, or details are present yet. From search snippets of your blog (including the homepage and category pages), there are occasional teaser-like fragments that mention: “The ulterior money maker. That is the setting, but what is true and what is ‘planned’ is another matter. We have several settings, but let me start by giving …”

This suggests the intended post might discuss hidden motives, ulterior financial incentives, “planned” versus real events — possibly in contexts like energy markets, geopolitics, oil/gas (given your other recent posts on ADNOC/LNG, Red Sea tensions, UAE exports, etc.), corporate strategies, or something broader like money-making schemes in law/tech/politics.

Nearby/recent posts on your blog (from snippets) cover topics like:

Globalisation and shrinking world (“As the world grows smaller”)

UAE/ADNOC expanding LNG shipping and exports

Red Sea/Houthi tensions calming but still sensitive

Energy industry job opportunities

Other pieces on science, insomnia/ambience ideas, or older political commentary

If this is a post you’re still drafting or just published, it might fill in soon with more detail on whatever “ulterior” financial angle you’re exploring. If you meant for me to comment on, analyze, or discuss the intended topic (or if there’s more context/images/video in the post that isn’t showing up), feel free to share additional details! What’s the main idea behind “The ulterior money maker”?

Grok just joined two separate stories into one mesh. In addition, consider the claim “However, the post itself appears to be a placeholder or draft at this stage — dated February 14, 2026, with the title “The ulterior money maker”, but it has no substantial body content”. That came from ‘expert mode’, which was run after Fast mode (the purple section), so as I see it, there is plenty wrong with that so-called ‘expert’ mode, the place where Grok thinks harder. So when you think that these systems are ‘A-OK’, consider that the programmer might be cutting corners, demolishing validations and checks to create a new mesh, one that you and (optionally) your company never signed up for. Especially as these two articles have very different foundations: ‘The ulterior money maker’ has links to SBS and Forbes, whereas ‘As the world grows smaller’ (written the day before) has merely one internal link to another article on the subject. As such, validation and verification are being skipped on several levels. And that is your upcoming handle on data integrity?

When I see these posing wannabes on LinkedIn, I have to laugh at their setting of depending fully on AI (it’s funny, as AI does not exist at present).

So when you consider the setting, there is another one, given by Google Gemini (also failing to some degree). It gives us a mere sliver of what was given, so there is not much to go on, and as I personally see it, it is slightly inferior to Grok Fast.

As such, there is plenty wrong with the current settings of Deeper Machine Learning in combination with LLMs. I hope this shows you what you are in for. Only 9 hours ago we saw ‘Microsoft breaks with OpenAI — and the AI war just escalated’, and I gather there is plenty more fun to be had, because Microsoft has a massive investment in OpenAI, and that might be the write-off Sam Altman needs to give rise to more ‘investors’. In all this, what will happen to the investments Oracle has put up? All interesting questions, and I reckon not too many answers will be forthcoming, because too many people have capital on ‘FakeAI’ and they don’t wanna be the last dodo out of the pool.

Have a great day.