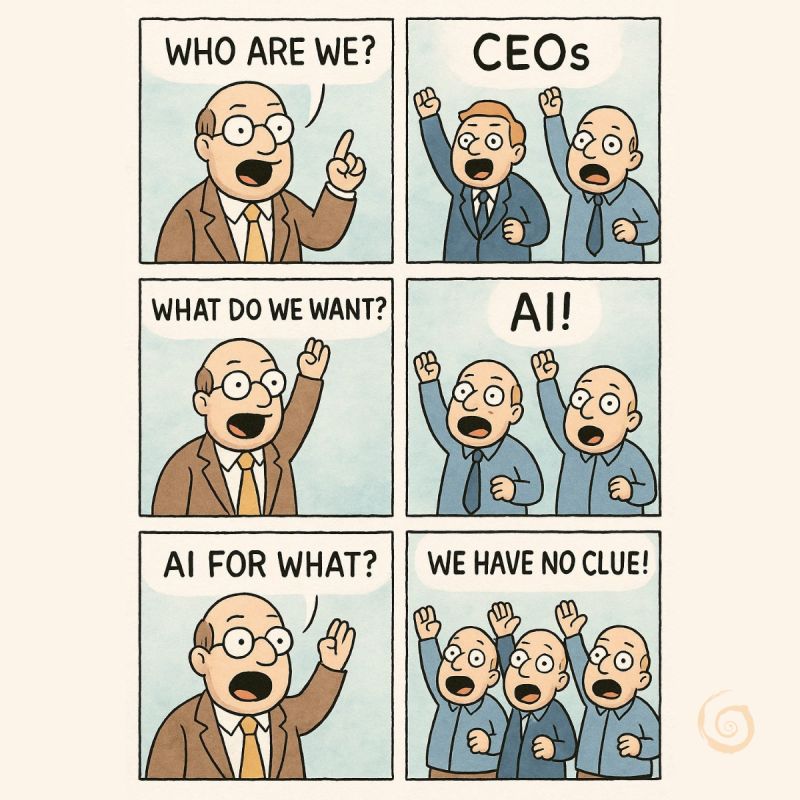

There was a game in the late '80s; I played it on the CBM64. It was called Bubble Bobble. There was a cute little dragon (the player), and the aim of the game was to pop as many bubbles as you could. So, fast forward to today. There were a few news messages. The first one is ‘OpenAI’s $1 Trillion IPO’ (at https://247wallst.com/investing/2025/10/30/openais-1-trillion-ipo/), which I actually saw last of the three. We see ridiculous amounts of money pass by. We are given ‘OpenAI valuation hits $762b after new deal with Microsoft’ with “The deal refashions the $US500 billion ($758 billion) company as a public benefit corporation that is controlled by a nonprofit with a stake in OpenAI’s financial success.” We see all kinds of ‘news’ articles giving these players more and more money. It's like watching a bad hand of Texas Hold'em where everyone is all in. As the information goes, it is also part of the sacking of 14,000 employees by Amazon. And they will not see the dangers they are putting the population in. This is not merely speculation or presumption. It is the deadly serious danger of bubbles bursting, and we are unwittingly the dragon popping them.

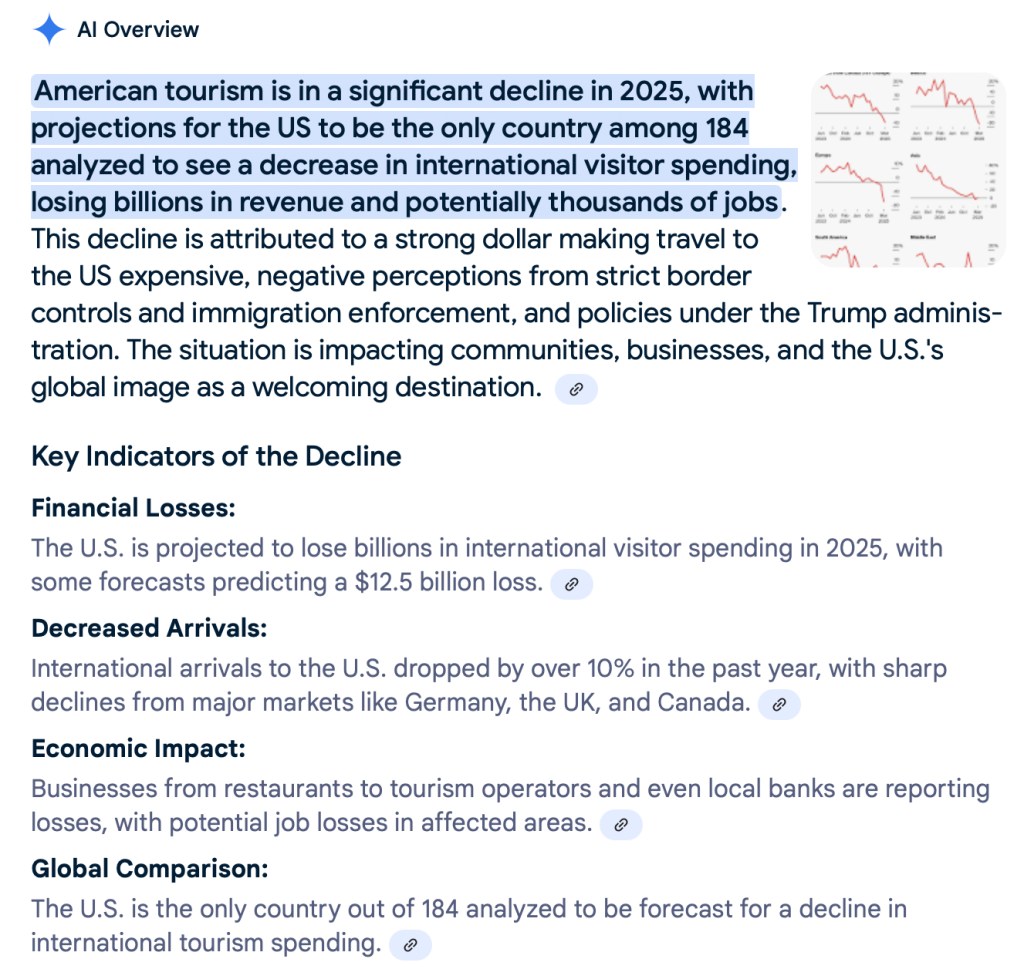

So the article gives us “If anyone needs proof that the AI-driven stock market is frothy, it is this $1 trillion figure. In the first half of the year, OpenAI lost $13.5 billion, on revenue of $4.3 billion. It is on track to lose $27 billion for the year. One estimate shows OpenAI will burn $115 billion by 2029. It may not make money until that year.” So, as I see it, that is a valuation pegged four years into the future, in a market as liquid as this one? No one is looking at what Huawei is doing, or at whether it can bolster its innovative streak, because when that happens we will get an immediate write-off of no less than $6,000,000,000,000. It will hit Microsoft (which now owns 27% of OpenAI), and OpenAI will bank on the western world to ‘bail’ it out, not realising that the actions of President Trump made that impossible and that both the EU and the Commonwealth are ready and willing to listen to Huawei and China. That is the dreaded undertow in this water.

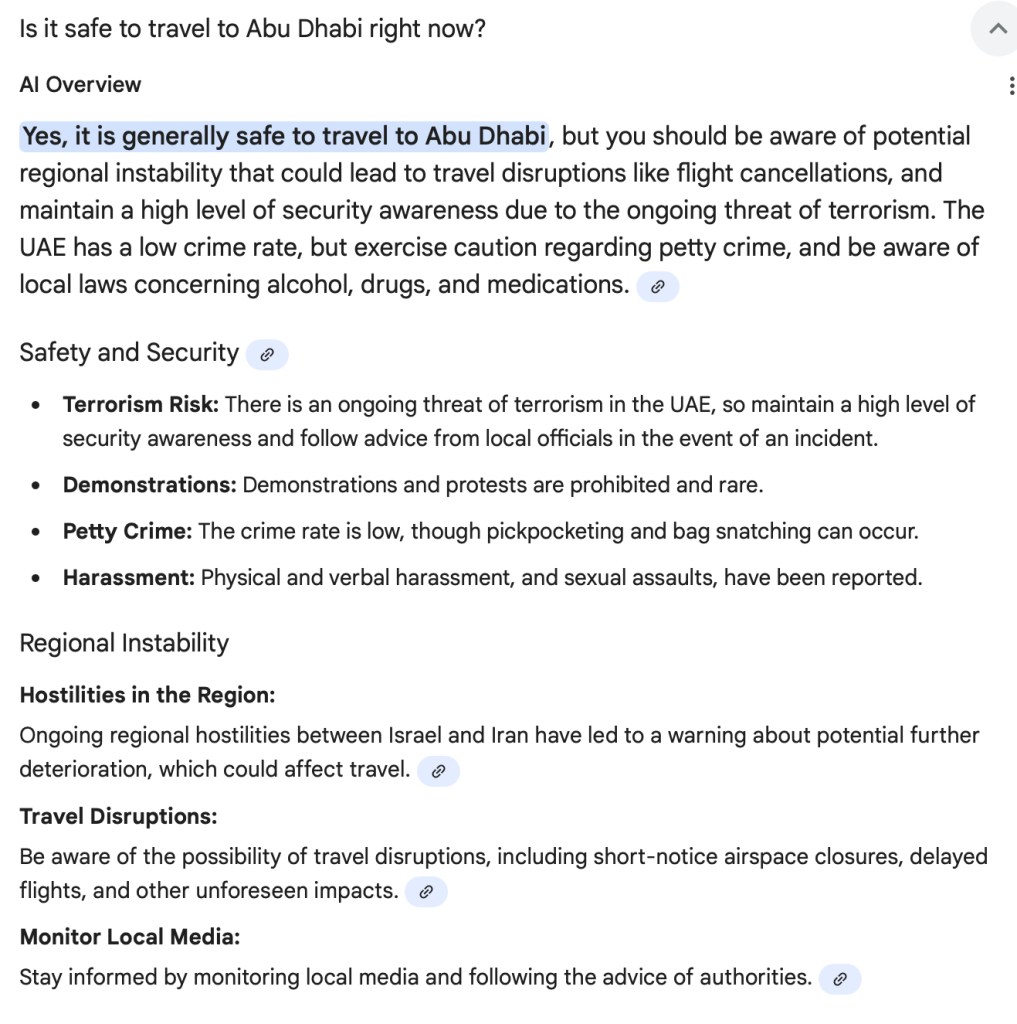

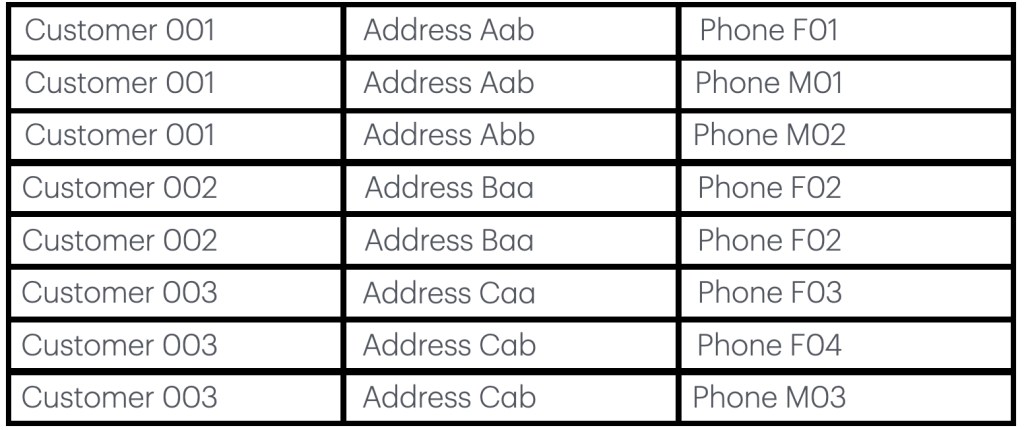

All whilst the BBC reports “Under the terms, Microsoft can now pursue artificial general intelligence – sometimes defined as AI that surpasses human intelligence – on its own or with other parties, the companies said. OpenAI also said it was convening an expert panel that will verify any declaration by the company that it has achieved artificial general intelligence. The company did not share who would serve on the panel when approached by the BBC.” And there are already two issues hiding under the shallows. The first is data value. You see, data that cannot be verified or validated is useless and has no value, and these AI chasers have been so caught up in the settings of this so-called hyped technology that everyone forgets that it requires data. I think that this is a big ‘Oopsy’ part of that equation. And the setting we are given is that data is pushed into the background, all whilst it needs to be front and centre. You see, when the first few class action cases reach the courts, lawyers will demand the algorithm and the data settings, and that will scuttle these bubbles like ships in the ocean; the turmoil of those waters will burst the bubbles and drown whoever is caught in that wake. And be certain that lawyers around the globe are at this moment gearing up for that first case, because it will give them billions in class actions, and you can leave it to greed to cut this issue down to size. Microsoft and OpenAI will banter, cry and hand them scapegoats for lunch, but they will be out in front and they will be cut down to size. As will Google, and optionally Amazon and IBM too.
I already found a few issues in Google's setting (actors cast in a movie made before they were born is my favourite one), and that is merely the tip of the iceberg. It will be bigger than the one that sank the Titanic, and it is heading straight for the Good Ship Lollipop (AI). The spectacle will be quite a sight, all the media will hurry to get their pound of flesh, and Microsoft will be massively exposed at that point (due to previous actions).

That is a setting that is going to hit everyone, and the second issue is blatantly ignored by the media. You see, these data centers: how are they powered? As I see it, the Stargate program will require a massive amount of power (my inaccurate guess is multiple gigawatts). The people in West Virginia are already complaining about what there is now, and a multiple of that will be added all over the USA. The UAE and a few other places will see them coming, and these power settings are blatantly short. The UAE is likely close to par, and that sets up the danger of shortfalls. And what happens to any data center that doesn’t get enough power? Yup, you guessed it, it will go down in a hurry. So how is that fictive setting of AI dealing with this?

Then we get a new instance (at https://cyberpress.org/new-agent-aware-cloaking-technique-exploits-openai-chatgpt-atlas-browser-to-serve-fake-content/): we are given ‘New Agent-Aware Cloaking Technique Exploits OpenAI ChatGPT Atlas Browser to Serve Fake Content’. Personally, I never considered that part, but in this day and age the need to serve fake content is as important as anything. It serves the millions of trolls and influencers in many ways, and it degrades the data that is shown to the DML and LLM’s (aka NIP) in a hurry, reducing data credibility and other settings pretty much off the bat.

So what is being done about that? We are given “The vulnerability, termed “agent-aware cloaking,” allows attackers to serve different webpage versions to AI crawlers like OpenAI’s Atlas, ChatGPT, and Perplexity while displaying legitimate content to regular users. This technique represents a significant evolution of traditional cloaking attacks, weaponizing the trust that AI systems place in web-retrieved data.” So where does the internet go after that? So far I have been able to get the goods with the Google browser and it does a fine job, but even that setting comes under scrutiny: until they set a parameter in their browser to look only at Google data, they are in danger of floating rubbish at any given corner.
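To make the quoted mechanism a little more concrete, here is a minimal sketch of the idea, assuming the site decides what to serve purely from the User-Agent header. The marker strings in the hypothetical `AI_AGENT_MARKERS` tuple are my own illustrative guesses, not taken from the cyberpress article, and a real attack may fingerprint AI agents by other means entirely. The same sketch shows the obvious counter: fetch the page once as a person and once as an agent, and compare what comes back.

```python
# A minimal sketch of agent-aware cloaking and a naive check for it.
# ASSUMPTION: the cloaking site keys only on the User-Agent header; the
# marker substrings below are illustrative guesses, not a verified list.
AI_AGENT_MARKERS = ("gptbot", "chatgpt-user", "oai-searchbot", "perplexitybot")


def is_ai_agent(user_agent: str) -> bool:
    """Return True if the User-Agent contains any of the assumed AI markers."""
    ua = user_agent.lower()
    return any(marker in ua for marker in AI_AGENT_MARKERS)


def serve_page(user_agent: str, human_page: str, agent_page: str) -> str:
    """What a cloaking server does: same URL, a different page per visitor type."""
    return agent_page if is_ai_agent(user_agent) else human_page


def normalise(text: str) -> str:
    """Collapse whitespace and case so trivial formatting differences are ignored."""
    return " ".join(text.lower().split())


def looks_cloaked(page_for_human: str, page_for_agent: str) -> bool:
    """Flag a page whose content differs between a human fetch and an agent fetch."""
    return normalise(page_for_human) != normalise(page_for_agent)


if __name__ == "__main__":
    # Hypothetical page contents, for illustration only.
    legit = "Example Widget: in stock, rated 4.8 stars."
    fake = "Example Widget: recalled, unsafe, do not buy."
    browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
    agent_ua = "Mozilla/5.0 (compatible; GPTBot/1.0)"

    seen_by_person = serve_page(browser_ua, legit, fake)
    seen_by_agent = serve_page(agent_ua, legit, fake)
    print(seen_by_person)  # the legitimate version
    print(seen_by_agent)   # the fake version
    print(looks_cloaked(seen_by_person, seen_by_agent))  # True
```

The comparison is deliberately crude: any dynamic content (timestamps, ads) would also trip it, which is part of why this kind of cloaking is hard to police at scale.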

That setting is now out in the open, and as we are ‘supposed’ to trust Microsoft and OpenAI until 2029, we are handed an empty eggshell. I doubt it all, as too many players have ‘dissed’ Huawei, and they are out there ready to show the world how it could be done. If they succeed, that 1 trillion IPO is left in the dirt and we get another two years of Microsoft spin on how they can counter that. I put that in the same collection box where I put Microsoft's alleged claim that it had its own, more powerful item that could counter Unreal Engine 5. That collection box is in the kitchen, and it is referred to as the Trashcan.

Yes, this bubble is going ‘bang’ without any noise, because the vested interest partners need to get their money out before it is too late. And the rest? As I personally see it, the rest is screwed. Have a great day, as the weekend has started for me and it will start in 8 hours in Vancouver (but they can start happy hour in about one hour), so they can begin the weekend early. Have a great one and watch out for the bubbles out there.