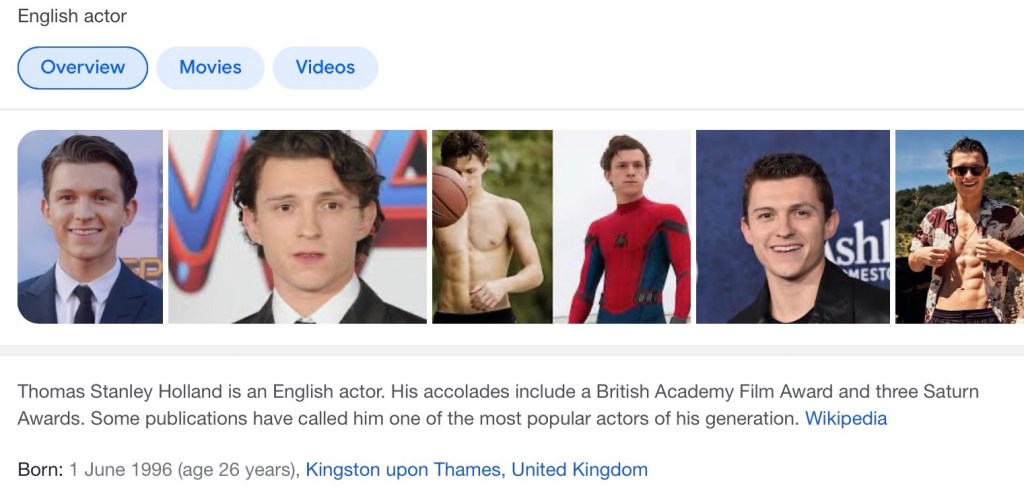

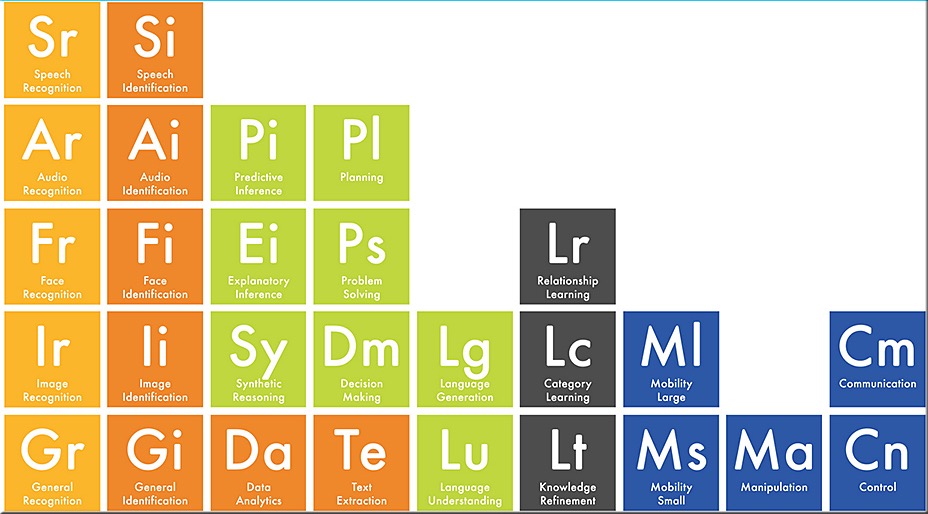

Yup, we are going there. It might not be correct, but that is where the evidence is leading us. You see, I got hooked on The Rookie and watched seasons one through four in a week. Yet the name Eric Winter was bugging me and I did not know why. The reason was simple: he also starred in the PS4 game ‘Beyond: Two Souls’, which I played in 2013. I liked that game and his name stuck somehow. Yet when I looked for his name I got

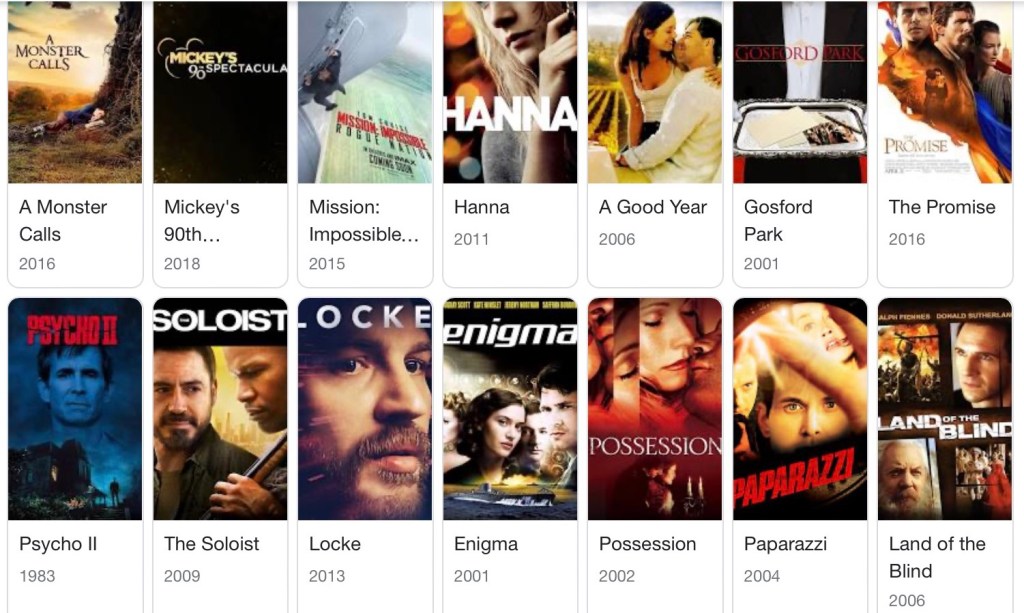

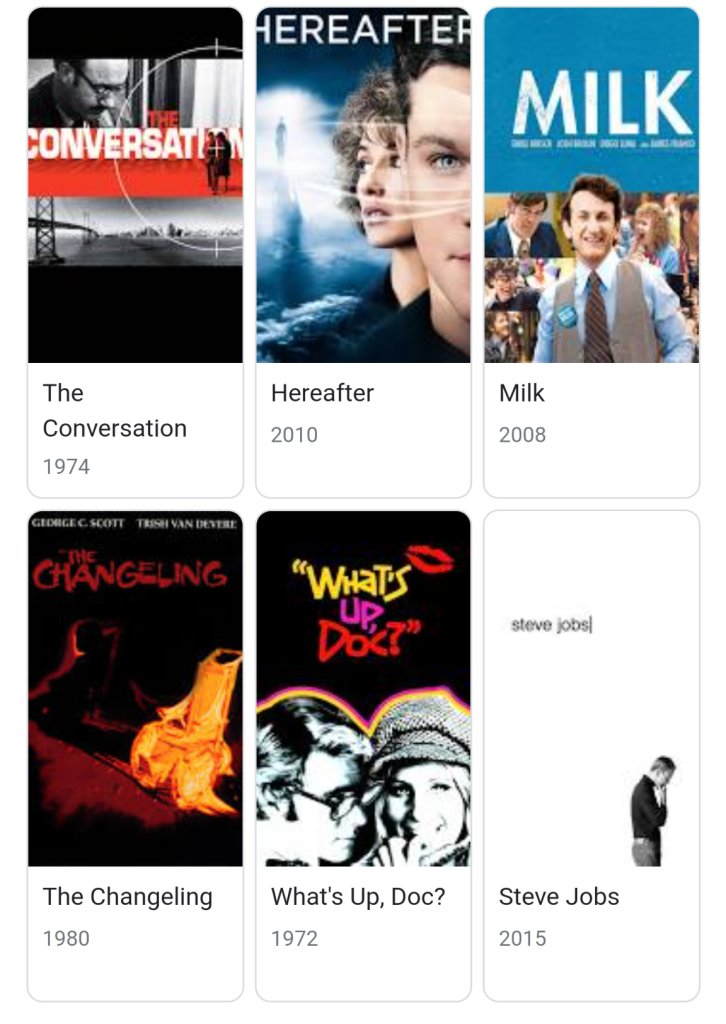

This got me curious. I saw two of these movies, and Eric would have been too young to be in them, yet there is the evidence, presented by Google. Eric Winter, born on July 17th 1976, played alongside Barbra Streisand 4 years before he was born: evidence of godhood.

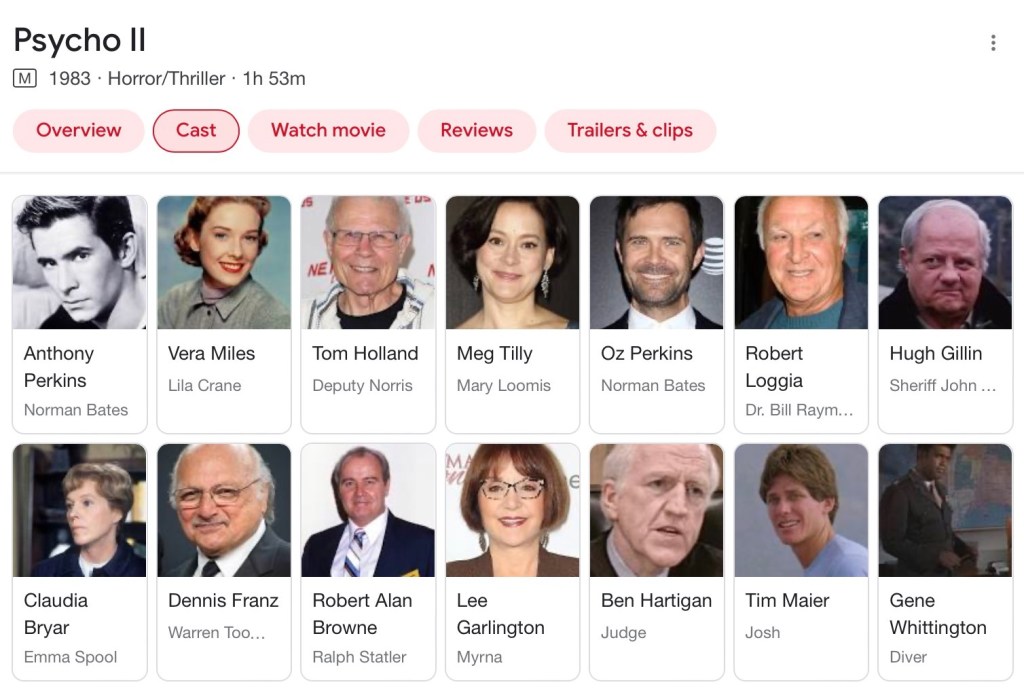

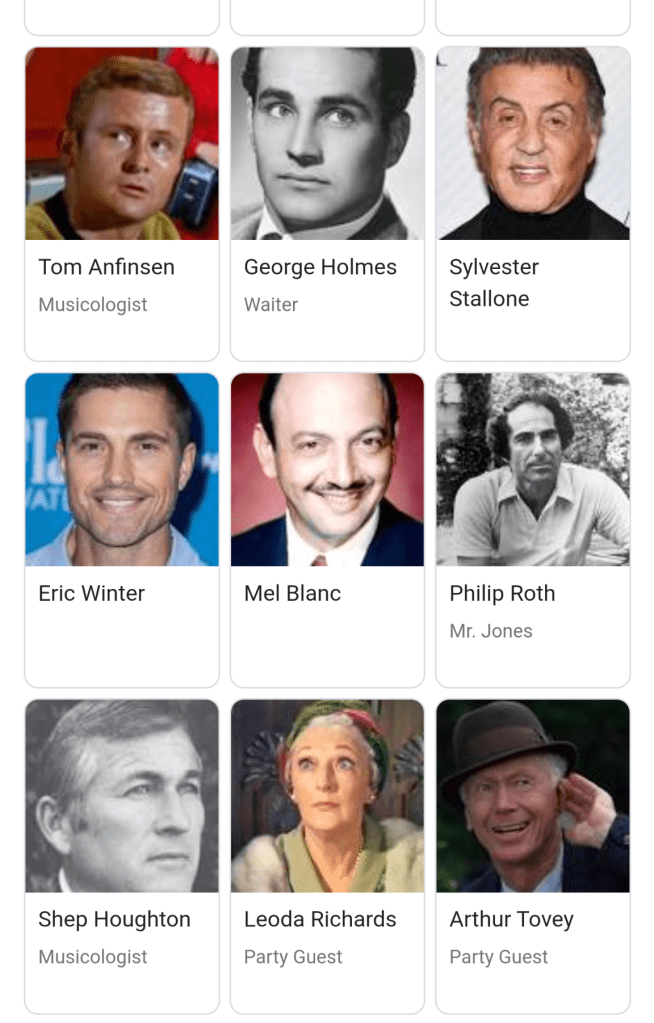

And when we look at the character list, there he is.

Yet when we look at a real movie reference like IMDb.com, we get

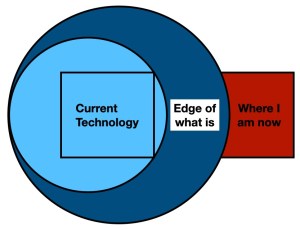

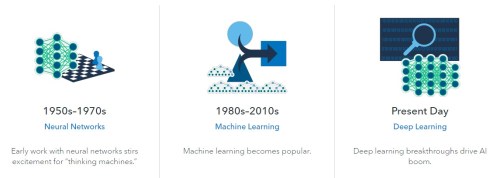

Yes, that is the real person who was in the movie. We can write this up as a simple error, but that is not the path we are treading. You see, people are all about AI and ChatGPT, but the real part is that AI does not exist (not yet anyway). This is machine learning and deeper machine learning, and it is prone to HUMAN error. If there is only a 1% error rate and we are looking at about 500,000 movies made, that implies that the movie reference alone will contain 5,000 errors. Now consider this on data of all kinds and you might start to see the picture take shape. When it comes to financial data and your advisor is not Sam Bankman-Fried, but Samual Brokeman-Fries (a fast-food employee), how secure are your funds then?

To be honest, whenever I see some AI reference I get a little pissed off. AI does not exist; it was called into existence by salespeople too cheap and too lazy to do their job and explain Deeper Machine Learning to people (my view on the matter), and things do not end here. One source gives us “The primary problem is that while the answers that ChatGPT produces have a high rate of being incorrect, they typically look like they might be good and the answers are very easy to produce,” while another source gives us issues with capacity, plagiarism and cheating, racism, sexism, and bias, as well as accuracy problems and the shady way it was trained.

That is the kicker. An AI would not need to be trained; it would compare the actor’s date of birth with the release date of the movie, so that The Changeling and What’s Up, Doc? fall into the net of inaccuracy. This is not happening, and the people behind ChatGPT are happy to point at you for handing them inaccurate data, but that is the point of an AI and its shallow circuits: to find the inaccuracies and determine the proper result (like a movie list without these two mentions).
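To show how small that birth-date check really is, here is a minimal sketch in Python. It is not how Google or ChatGPT work internally; the `Credit` class, the `suspicious_credits` function and the `min_age` threshold of 5 are my own assumptions for illustration, and the filmography is a hand-typed sample using release years only. It also redoes the 1% arithmetic from above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Credit:
    """One filmography entry (hypothetical structure, for illustration)."""
    title: str
    release_year: int


def suspicious_credits(birth_year, credits, min_age=5):
    """Return credits where the actor would have been younger than min_age.

    min_age is an assumed threshold; child actors exist, so a flagged
    credit is a candidate for review, not proof of an error.
    """
    return [c for c in credits if c.release_year - birth_year < min_age]


# Hand-typed sample of the credits Google showed for Eric Winter (born 1976).
credits = [
    Credit("What's Up, Doc?", 1972),
    Credit("The Changeling", 1980),
    Credit("Beyond: Two Souls", 2013),
    Credit("The Rookie", 2018),
]

flagged = suspicious_credits(1976, credits)
for credit in flagged:
    print(f"needs review: {credit.title} ({credit.release_year})")

# The 1% example: 1 in 100 of roughly 500,000 movies.
expected_errors = 500_000 * 1 // 100
print(f"expected bad records at 1%: {expected_errors}")
```

With this sample, the two credits that get flagged are What’s Up, Doc? and The Changeling, and the other two pass. A rule this crude will also flag genuine child actors, which is why it should feed a review queue rather than delete records on its own.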

And now we get the source Digital Trends (at https://www.digitaltrends.com/computing/the-6-biggest-problems-with-chatgpt-right-now/) who gave us “ChatGPT is based on a constantly learning algorithm that not only scrapes information from the internet but also gathers corrections based on user interaction. However, a Time investigative report uncovered that OpenAI utilised a team in Kenya in order to train the chatbot against disturbing content, including child sexual abuse, bestiality, murder, suicide, torture, self-harm, and incest. According to the report, OpenAI worked with the San Francisco firm, Sama, which outsourced the task to its four-person team in Kenya to label various content as offensive. For their efforts, the employees were paid $2 per hour.” I have done data cleaning for years and I can tell you that I cost a lot more than $2 per hour. Accuracy and cutting costs: give me one real stage where that actually worked?

Now the error at Google was a funny one, in the same vein as Melissa O’Neil, a real Canadian, telling Eric Winter that she had feelings for him (punking him in an awesome way). We can see that this is a simple error, but these are the errors that places like ChatGPT are facing too, and as Microsoft is staging this in Azure (it already seems to be), the people employing systems like ChatGPT will over time get into a massive amount of trouble. It might be speculative, but consider the evidence out there. Consider the errors that you face on a regular basis and consider how highly paid accountants and marketeers lose their jobs over rounding errors. You really want to rely on a $2 per hour person to keep your data clean? For this, merely look at the ABC article of June 9th 2023, where we were given ‘Lawyers in the United States blame ChatGPT for tricking them into citing fake court cases’. Accuracy anyone? Consider that: the court cases were fake, and in reality they were invented by the artificial intelligence-powered chatbot.

In the end I liked my version better: Eric Winter is a god. It is not as accurate as reality, but it is more easily swallowed by all who read it, and it was the funny event that gets you through the week.

Have a fun day.