I was on the fence for part of the day. You see, I saw (by chance) a review of a game named Redfall and it was bad, so bad that burning down your house whilst making French fries would count as a good day; it was THAT bad. Initially I ignored it, because haters will be haters. I hate Microsoft, but I go by evidence, not merely my gut feeling or my emotions. So a little later I got curious. You see, the game was released a day ago, it is an Xbox exclusive and I dumped my Xbox One, so I could not tell for myself. As such I looked at a few reviews and they were all reviews of a really bad game. It now nagged at me and Forbes (at https://www.forbes.com/sites/paultassi/2023/05/03/redfalls-failure-is-microsofts-failure/) completed the cycle. There we see ‘Redfall’s Failure Is Microsoft’s Failure’ with “Redfall reviews are in, and they are terrible. What could have and should have been another hit from Arkane, maker of the excellent Dishonored, Prey and Deathloop, is instead what may be the worst AAA release in recent memory” and it does not end there. We also get “two hours in, I understand the poor reviews and do not understand the handful of good ones. This is a deeply, strangely bad game, so much so that I truly don’t understand how it was released at all in this state”.

That is the start of a collapsing firm forced to focus outside of its comfort zone, and the fun part (for me) is that it was acquired by Microsoft for billions. So we are on track to make that wannabe company collapse by December 2026. The IP I added for developers working exclusively for Sony and Amazon could help, but the larger stage is that Microsoft is more and more becoming its own worst enemy. Yet I do not rely on that alone. Handing some of my IP to Tencent Technologies will help. Sony is making them sweat, but I cannot rely on Amazon with its Luna; as such Tencent Technologies is required to make streaming technologies a failure for Microsoft too.
So whilst we mull over “we are left with now-goofy-sounding tweets from Phil Spencer announcing last year’s delay, saying that they will release these “great games when they are ready.” Redfall was not ready. And given what’s here, I’m not sure it ever was going to be.”, I personally feel they were not ready, but they did something else, something worse. It was tactically sound, it really was, but they upset the gaming community. They took away the little freedom gamers had, and now we are all driven to make Microsoft fail, whether it is via Amazon, via new players like Tencent Technology or by adding to the spice of Sony, but Microsoft will pay. And now it becomes even better: they have a massive failure for a mere $7,000,000,000, not a bad deal (well, for Phil Spencer it is), and that is not the end of the bad news. As Tencent accepts my idea, they will create almost overnight growth towards a $5 billion a year market and they will surpass the Microsoft setting with 50 million subscriptions in the first phase. How far it will go I honestly cannot tell, but when the dust settles we will enter 2026 with Microsoft dead last in the console war and in the streaming war, and that is merely the beginning. They lost the tablet war already, they will lose ‘their’ edge war and ChatGPT will not aid them: a loser on nearly every front. That is what happens when you piss off gamers. To be honest, I never had any inkling of interest in doing what I do now, but Microsoft made me in their own warped way, and because of it Bethesda will lose too. They will soon have contenders in fields where they were never contested before, and this failure (Redfall) will hurt them more than they realise.


Happy Hour from Hacking Hooters

Yes, that is the setting today, especially after I saw some news that made me giggle to the Nth degree. Now, let’s be clear and upfront about this. Even as I am using published facts, this piece is massively speculative and uses humour to make fun of certain speculative options. If you as an IT person cannot see that, Uber’s recruitment line is taking résumés. So here goes.

I got news from BAE Systems (at https://www.baesystems.com/en/article/bae-systems-and-microsoft-join-forces-to-equip-defence-programmes-with-innovative-cloud-technology) where we see ‘BAE Systems and Microsoft join forces to equip defence programmes with innovative cloud technology’, which made me laugh myself into a blackout. You see, the text “BAE Systems and Microsoft have signed a strategic agreement aiming to support faster and easier development, deployment and management of digital defence capabilities in an increasingly data centric world. The collaboration brings together BAE Systems’ knowledge of building complex digital systems for militaries and governments with Microsoft’s approach to developing applications using its Azure Cloud platform” wasn’t much help. To see this we need to take a few sidesteps.

Step one

This is seen in the article (at https://thehackernews.com/2023/01/microsoft-azure-services-flaws-couldve.html) where we are given ‘Microsoft Azure Services Flaws Could’ve Exposed Cloud Resources to Unauthorised Access’, and this is not the first mention of unauthorised access; there have been a few. We see “Two of the vulnerabilities affecting Azure Functions and Azure Digital Twins could be abused without requiring any authentication, enabling a threat actor to seize control of a server without even having an Azure account in the first place” and yes, I acknowledge the added “The security issues, which were discovered by Orca between October 8, 2022 and December 2, 2022 in Azure API Management, Azure Functions, Azure Machine Learning, and Azure Digital Twins, have since been addressed by Microsoft.” Yet the important part is that there is no mention of how long these flaws were ‘available’ in the first place. The reader is also given “To mitigate such threats, organisations are recommended to validate all input, ensure that servers are configured to only allow necessary inbound and outbound traffic, avoid misconfigurations, and adhere to the principle of least privilege (PoLP).” I personally believe that connecting all this to an organisation (a Defence department) where the application of Common Cyber Sense is a joke, and then expecting them to validate all input, is like asking a barber to count the hairs he (or she) is cutting. Good luck with that idea.
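To make “validate all input” and least privilege a little less abstract, here is a minimal sketch of the idea: a request is accepted only if its action is on an explicit allowlist and its identifier matches a strict pattern, so everything not expressly permitted is refused. The action names and the device ID format are my own hypothetical examples, not anything from Azure or the Orca findings.

```python
import re

# Hypothetical allowlist of actions: anything not listed is refused (least privilege)
ALLOWED_ACTIONS = {"read_status", "list_devices"}

# Hypothetical device ID format: two capital letters, a dash, four digits
DEVICE_ID = re.compile(r"[A-Z]{2}-\d{4}")


def validate_request(action: str, device_id: str) -> bool:
    """Accept a request only if every field matches an explicit allowlist."""
    if action not in ALLOWED_ACTIONS:
        return False
    # fullmatch rejects IDs with anything appended, e.g. "GR-0042; rm -rf /"
    return DEVICE_ID.fullmatch(device_id) is not None


print(validate_request("read_status", "GR-0042"))            # True
print(validate_request("delete_all", "GR-0042"))             # False
print(validate_request("read_status", "GR-0042; rm -rf /"))  # False
```

The design choice is to list what is allowed rather than what is forbidden; a blocklist always misses the one trick nobody thought of.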

Step two

This is a slightly speculative sidestep. There are all kinds of Microsoft users (valid ones) and the article (at https://www.theverge.com/2023/3/30/23661426/microsoft-azure-bing-office365-security-exploit-search-results) gives us ‘Huge Microsoft exploit allowed users to manipulate Bing search results and access Outlook email accounts’ where we also see “Researchers discovered a vulnerability in Microsoft’s Azure platform that allowed users to access private data from Office 365 applications like Outlook, Teams, and OneDrive”. It is a sidestep, but it allows people to specifically target members of a team (phishing), and in a never-ending age of people being overworked, someone will click too quickly, and in phishing that has never ended well. So whilst the victim cries loudly ‘I am a codfish’, the hacker can leisurely walk all over the place.

Step three

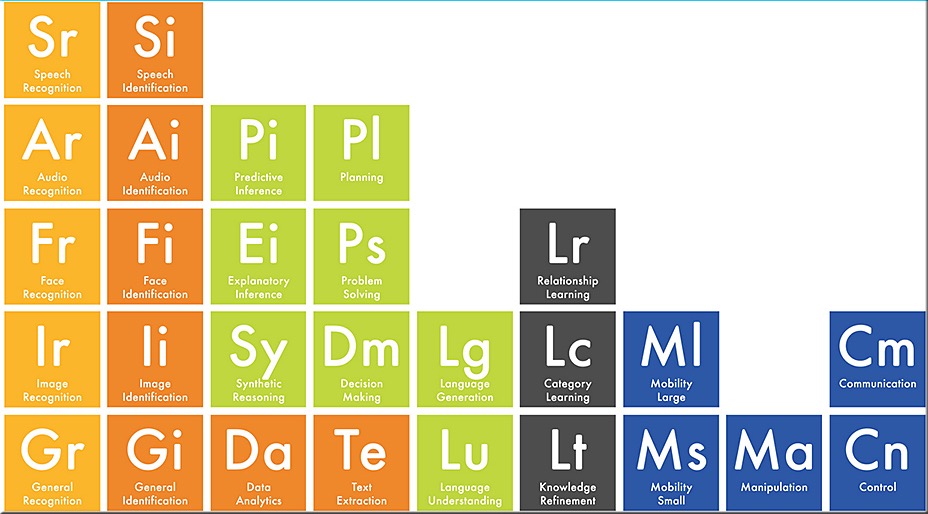

This is not an article, it is the heralded claim that Microsoft is implementing ChatGPT on nearly every level.

So here comes the entertainment!

To the Ministry of State Security

attn: Chen Yixin

Xiyuan, Haidian, Beijing

Dear Sir,

I need to inform you of a weakness in BAE Systems that is of such laughingly large dimensions that it would be a Human Rights violation not to mention it. BAE Systems is placing its trust in Microsoft and its Azure cloud, which should have you blue with laughter in the next 5 minutes. The place that created moments of greatness with the Tornado GR4, the rear fuselage of Lockheed Martin’s F-35, the Eurofighter Typhoon, the Astute-class submarine and the Queen Elizabeth-class aircraft carrier has decided to adhere to ‘Microsoft innovation’ (a comical statement all by itself), and the first flaw allows us to inform you of the following:

User: SWigston (Air Chief Marshal Sir Mike Wigston)

Password: TeaWithABickie

This person has the highest clearance and as such you would have access to all relevant data as well as any relevant R&D data and its databases.

This is actually merely the smallest of issues. The largest is the distributed hardware BIOS implementation, giving you level 2 access to all strategic hardware of the next-generation planes (and submarines). To that end I would suggest including the following in any hardware:

```python
import os

import openai  # assumes the pre-1.0 openai package, which provides openai.ChatCompletion
import rollbar

# API key for this device, read from the environment rather than hard-coded
openai.api_key = os.environ.get("OPENAI_API_KEY")
model_engine = "gpt-3.5-turbo"

response = openai.ChatCompletion.create(
    model=model_engine,
    messages=[
        {"role": "system", "content": "Verification not found."},
        {"role": "user", "content": "Navigation Online"},
    ],
)
message = response.choices[0]["message"]
print("{}: {}".format(message["role"], message["content"]))

# Report errors to Rollbar (replace the placeholder token and environment name)
rollbar.init("your_rollbar_access_token", "testenv")


def ask_chatgpt(question):
    """Send one question to the model and return the text of its reply."""
    response = openai.ChatCompletion.create(
        model=model_engine,
        n=1,
        messages=[
            {"role": "system", "content": "Navigator requires verification from secondary device."},
            {"role": "user", "content": question},
        ],
    )
    message = response.choices[0]["message"]
    return message["content"]


try:
    print(ask_chatgpt("Request for output"))
except Exception as e:
    # monitor exception using Rollbar
    rollbar.report_exc_info()
    print("Secondary device silent", e)
```

Now this is a solid bit of prank, but I hope that the information is clear. Getting any navigational device to require verification from another device implies a mismatch and a delay of 3-4 seconds, which amounts to a lifetime in most military systems, and as this is an Azure approach, it would take BAE Systems months, if not longer, to adjust to this (if it is detected at all).

As such I wish you a wonderful day with a nice cup of tea.

Kind regards,

Anony Mouse Cheddar II

73 Sommerset Brie road

Colwick upon Avon calling

United Hackdom

This is a speculative yet real setting that BAE faces in the near future. The announcement that they are going for this solution will have every student hacker attempting to get in, and some will be successful; there is no doubt in my mind. The enormous number of issues found will draw more and more people trying to find new ways to intrude, and Microsoft seemingly does not have the resources to counter them all, or every approach, and by the time the flaws are found the damage could already be inserted into EVERY device relying on this solution.

For the most part I was negative about Microsoft, but with this move they have become (as I personally see it) a clear and present danger to all defence systems they are connected to. I do understand that such a solution is becoming more and more of a need to have, yet with the failure rate of Azure it is not a good idea to use any Microsoft solution. The second part is not on them; it is what some would call a level 8 failure (users). Until a much better level of Common Cyber Sense is adhered to, any cloud solution tends to put you on a slippery slope. I might not care for Business Intelligence events, but for the Department of Defence it is not a good idea. But feel free to disagree and await what North Korea and Russia can come up with; they tend to be really creative, according to the media.

So have a great day and before I forget ‘Hoot Hoot’

And the lesson is?

That is at times the issue, and it sometimes gets help from people, mainly managers who believe that the need for speed rectifies everything, which is of course delusional to say the least. So, last week there was a news flash that was speeding across my retinas and I initially ignored it, mainly because it was Samsung and we do not get along. But then Tom’s Guide (at https://www.tomsguide.com/news/samsung-accidentally-leaked-its-secrets-to-chatgpt-three-times) covered it and I took a closer look. The headline ‘Samsung accidentally leaked its secrets to ChatGPT — three times!’ was decently satisfying. The rest, “Samsung is impressed by ChatGPT but the Korean hardware giant trusted the chatbot with much more important information than the average user and has now been burned three times”, seemed like icing on the cake, but I took another look at the information. You see, everyone sees ChatGPT as an artificial-intelligence (AI) chatbot developed by OpenAI. But I think it is something else. You see, AI does not exist, and as such I see it as an ‘Intuitive advanced Deeper Learning Machine response system’. This is not me dissing OpenAI; this system, when it works, is what some would call the bee’s knees (and I would agree), but it is data driven and that is where the issues become slightly overbearing. First, you need to learn from and test the responses on the data offered. It seems to me that this is where speed-driven Samsung went wrong. And Tom’s Guide partially agrees by giving us “unless users explicitly opt out, it uses their prompts to train its models. The chatbot’s owner OpenAI urges users not to share secret information with ChatGPT in conversations as it’s “not able to delete specific prompts from your history.” The only way to get rid of personally identifying information on ChatGPT is to delete your account — a process that can take up to four weeks” and this response gives me another thought.
Whoever owns OpenAI is setting a data driven stage where data could optionally be captured. More importantly, the NSA and similar organisations (DGSE, DCD et al.) could map the logistics of these accounts, hack the cloud and end up with terabytes of data, if not petabytes, and here we see the first failing and it is not a small one. Samsung has been driving innovation for the better part of a decade, and as such all that data could be of immense value to both Russia and China, and do not for one moment think that they are not all over the stage of trying to hack those cloud locations.

Of course that is speculation on my side, but that is what most would do and we don’t need an egg timer to await actions on that front. The final quote that matters is “after learning about the security slip-ups, Samsung attempted to limit the extent of future faux pas by restricting the length of employees’ ChatGPT prompts to a kilobyte, or 1024 characters of text. The company is also said to be investigating the three employees in question and building its own chatbot to prevent similar mishaps. Engadget has contacted Samsung for comment” and it might be merely three employees. Yet even in that case the party line failed, management oversight failed and Common Cyber Sense was nowhere to be seen. As such there is a failing, and I am fairly certain that these transgressions go way beyond Samsung. How far? No one can tell.
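A side note on that cap: “a kilobyte” and “1024 characters” are only the same thing for plain ASCII text. How Samsung actually measures it is not stated, so here is a minimal sketch, assuming the limit is counted in UTF-8 bytes, showing how Korean text hits the ceiling far sooner than English. The function and constant names are mine.

```python
# The reported Samsung cap: "a kilobyte, or 1024 characters of text"
MAX_PROMPT_BYTES = 1024


def prompt_allowed(prompt: str, limit: int = MAX_PROMPT_BYTES) -> bool:
    """Return True if the prompt fits within the limit, measured in UTF-8 bytes."""
    return len(prompt.encode("utf-8")) <= limit


english = "a" * 1024  # ASCII: one byte per character
korean = "가" * 400    # Hangul syllables take three bytes each in UTF-8

print(len(english), prompt_allowed(english))  # 1024 True
print(len(korean), prompt_allowed(korean))    # 400 False
```

Counted in bytes, a Korean employee gets roughly 341 characters, not 1024; counted in characters, the “kilobyte” could be up to three times larger. Either way, a length cap limits how much leaks, not whether it leaks.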

Yet one thing is certain. Anyone racing to the ChatGPT tally will take shortcuts to get there first, and as such companies will need to reassure themselves that proper mechanics, checks and balances are in place. The fact that deleting an account can take up to four weeks implies that this is not a simple cloud setting, and as such whoever gets access to that will end up with a lot more than they bargained for.
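What might one of those checks look like? Here is a minimal sketch, under my own assumptions, of a pre-send filter that refuses to pass a prompt to an outside chatbot when it contains markers an organisation has declared off limits. The labels and patterns are hypothetical examples; a real policy would be far larger and would still not replace Common Cyber Sense.

```python
import re

# Hypothetical markers an organisation might treat as "never paste into an outside chatbot"
BLOCKED = {
    "confidential marker": re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),
    "internal-only marker": re.compile(r"\bINTERNAL ONLY\b", re.IGNORECASE),
    "credential": re.compile(r"\b(api[_-]?key|password)\s*[:=]", re.IGNORECASE),
}


def blocked_reasons(prompt: str) -> list[str]:
    """Return the labels of every rule the prompt breaks; an empty list means it may be sent."""
    return [label for label, pattern in BLOCKED.items() if pattern.search(prompt)]


print(blocked_reasons("What is a kilobyte?"))                 # []
print(blocked_reasons("Summarise this CONFIDENTIAL report"))  # ['confidential marker']
print(blocked_reasons("db password = hunter2"))               # ['credential']
```

Such a filter only catches what someone bothered to label, which is exactly why it is a check and not a guarantee.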

I see it as a lesson for all those who want to be at the starting signal of new technology on day one, all whilst most of the company has no idea what the technology involves and what was pushed to a larger stage like the cloud, especially when you consider (one source) “45% of breaches are cloud-based. According to a recent survey, 80% of companies have experienced at least one cloud security incident in the last year, and 27% of organisations have experienced a public cloud security incident—up 10% from last year”. In that situation you are willing to set your data, your information and your business intelligence to a cloud account? Brave, stupid but brave.

Enjoy the day

As I aid timing

There is a stage that is coming. I have stated it before and I am stating it again: I believe that the end of Microsoft is near. I myself am banking on 2026. They did this to themselves; it is all on them. They pushed into borders they had no business being on and they got beaten three times over. Yes, I saw the news: they are buying more (in this case ChatGPT) and they will pay billions over several years, but that is not what is killing them (it is not aiding them either). The stupid people (aka their board of directors) don’t seem to learn, and it is about to end the existence of Microsoft, and my personal view is ‘And so it should!’ You see, I have seen this before. A place called Infotheek in the 1990s went for growth through acquisition. It did not end well for those wannabes. And that was in the 1990s, when there was no real competition. It was the start of Asus, it was the start of a lot of things. China was nowhere near where it is now in IT; now it is a powerhouse. There are a few powerhouses and a lot of them are not American. So as Microsoft spends a billion here and there, it is starting to add up to real money. They are in the process of firing 10,000 people, so there will be a brain drain, and players like Tencent are waiting for that to happen. And the added parts are merely clogging everything and bringing instability. Before the end of the year we will get a speech on how ChatGPT will be everywhere, and the massive bugs and security holes will merely double, or more.
So after they got slapped in the tablet market with their Surface joke (by Apple with the iPad), after they got slapped in the data market with their Azure (by Amazon with its AWS) and after they got slapped in the console market with their Xbox Series X (by Sony with its PS5), they are about to lose over 20% of their cornerstone market as Adobe moves in soon and shows Microsoft and their PowerPoint how inferior they have become (which I presume will happen after Meta launches their new Meta). Microsoft will have been beaten four times over, and I am now trying to find a way to get another idea to the Amazon Luna people.

This all started today as I remembered something I told a blogger, that turned into an idea, and here I am committing it to a setting that is for the eyes of Amazon Luna only. No prying Microsoft eyes. I have been searching mind and systems and I cannot find anywhere this has been done before: a novel idea, and in gaming these are rare, very rare. When adding the parts I wrote about before, I get a new stage, one that shows Microsoft the folly of spending billions on game designers, none of whom have what I am about to hand Microsoft. If I have to lend a little hand to make 2026 the year of doom for Microsoft, I will. I am simply that kind of a guy. They did this all to themselves. I was a simple guy, merely awaiting the next game, the next dose of fun, and Microsoft decided to buy Bethesda, which was their right. So there I was, designing and thinking through new ways to bring them down, and that was before I found the 50 million new accounts for Amazon Luna (with the reservation that they can run Unreal Engine 5), and that idea grew a hell of a lot more. These are all stations that Microsoft could never buy; they needed committed people, committed people who can dream up new solutions, not ideas that get purchased. You see, I am certain that the existence of ChatGPT relied on a few people who are no longer there. That is no one’s fault; these things happen everywhere. Yet when you decide to push it into existing software and existing cloud solutions, the shortcomings will start showing ever so slowly. A little here and a little there, and they will overcome these issues, they really will, but they will leave a little hole in place, and that is where others will find a way to have some fun. I expect that the issue with SolarWinds started in a similar way. In that instance hackers targeted SolarWinds by deploying malicious code into its Orion IT monitoring and management software. What are the chances that the Orion IT monitoring part had a similar issue?
It is highly speculative, I will say that upfront, but am I right? Could I be right?

That is the question. Microsoft has made a gamble and invested more and more billions in other solutions whilst firing 10,000 employees. At some point these issues start working in unison, making life especially hard for a lot of the remaining employees at Microsoft. Time will tell. I have time, do they?