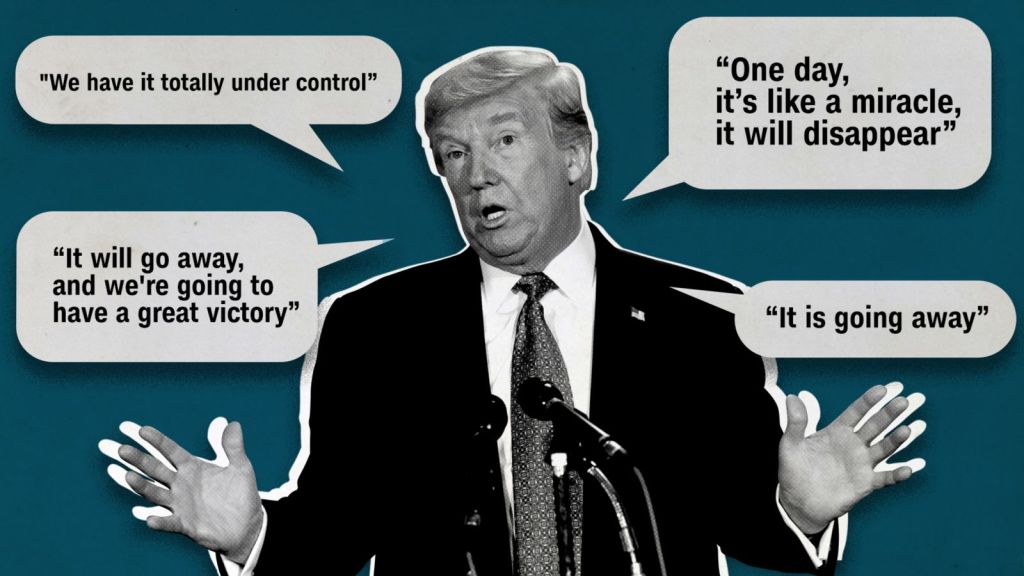

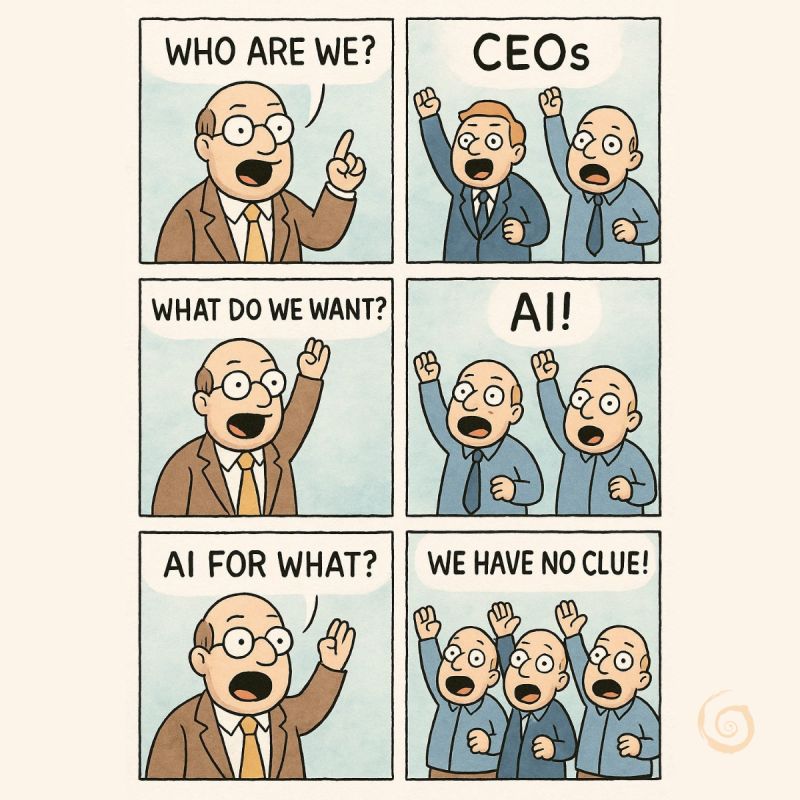

Perhaps you remember the ’80s series Soap. Someone made a sitcom out of the most hilarious settings and took it up a notch. The series was called Soap, people loved it and it did nearly everything right, but over time this bubble went, just like all other soap bubbles tend to go, and that is OK: they made their mark and we felt fine. There is another kind of bubble, and it is not as good. There was the mortgage bubble, the housing bubble (they were not the same) and the economy bubble, and all these bubbles come with an aftermath. Now we see the AI bubble. I predicted this as early as January 29th of this year in ‘And the bubble said ‘Bang’’ (at https://lawlordtobe.com/2025/01/29/and-the-bubble-said-bang/), and my position is that AI does not yet exist. As I see it, it is for the most part the construct of lazy salespeople who couldn’t be bothered to do their work, so they created the AI ‘Fab’ and hauled it over to fit their needs. Let’s be clear: there is no AI, and when I use the term I know that ‘the best’ I am doing is avoiding a long discussion about how great DML and LLM are, because they are, and it is amazing. When these technologies are used correctly, they will create millions if not billions in revenue.

I got the idea to overhaul the Amazon system and let them optionally create online panels that could bank them billions, which I did in ‘Under Conceptual Construction’ (at https://lawlordtobe.com/2025/10/10/under-conceptual-construction/) and ‘Prolonging the idea’ (at https://lawlordtobe.com/2025/10/12/prolonging-the-idea/), the latter written yesterday (almost 16 hours ago). I also shed light on an amazing lost and found idea which would cater to the needs of airports and bus terminals. I saw that presentation and it was an amazing setting in what I still call NIP (Near Intelligent Parsing), in ‘That one idea’ (at https://lawlordtobe.com/2025/09/26/that-one-idea/). These are mere settings, but they could be market changers. This is the proper use of IT: taking automation to its next setting.

But the underlying bubble still exists; I merely don’t feed that beast. So when the BBC gave us all ‘‘It’s going to be really bad’: Fears over AI bubble bursting grow in Silicon Valley’ almost two days ago (at https://www.bbc.com/news/articles/cz69qy760weo), I saw the sparkly setting of soap bubbles erupt and I thought ‘That did not take long’. My position was that AI (the real AI as Alan Turing saw it) was not ready yet, for the small reason that at least three parts of IT did not yet exist. One of them is the true power of quantum computing. As I see it, quantum computers are real, but they are in the early stages of development and not yet as powerful as future versions should be. Still, as IBM rolls out its second system on the IBM Heron platform, we are getting there. It is IBM’s 156-qubit IBM Quantum Heron; just don’t get your hopes up, not many can afford that platform. IBM is not stopping there and gives us “The computer, called Starling, is set to launch by 2029. The quantum computer will reside in IBM’s new quantum data center in upstate New York and is expected to perform 20,000 more operations than today’s quantum computers”. I am not holding my credit card ready for that beauty. If anyone, the only two people on the planet who can afford that setting are Elon Musk and Larry Ellison, and Larry might buy it to see Oracle running at actual quantum speed. He might well do it, just to see quantum speed arrive in his lifetime; the man is 81 after all (so he is no longer a teenager). If I had that kind of money (250,000 million) I would do it too, just to see what this world has achieved. But the article (the BBC one) gives us ““I know it’s tempting to write the bubble story,” Mr Altman told me as he sat flanked by his top lieutenants. “In fact, there are many parts of AI that I think are kind of bubbly right now.”

In Silicon Valley, the debate over whether AI companies are overvalued has taken on a new urgency. Skeptics are privately – and some now publicly – asking whether the rapid rise in the value of AI tech companies may be, at least in part, the result of what they call “financial engineering”.” And the BBC is not wrong: we had a write-off of a trillion dollars in January and another one of 1.5 trillion dollars a few days ago. I would be willing to call that ‘Financial Engineering’, and that rapid rise? Call it the greedy need of salespeople to get their audience into a frenzy.

I merely gave a few examples of what DML and LLM could achieve, and getting a lost and found process down from weeks to minutes is quite the achievement. I reckon that places like JFK, Heathrow and Dubai Airport would jump at the chance to arrange a better lost and found department, and they are not alone. But one has to wonder how the market can write off trillions in merely two events. So when we get to

“In recent days, warnings of an AI bubble have come from the Bank of England, the International Monetary Fund, as well as JP Morgan boss Jamie Dimon who told the BBC “the level of uncertainty should be higher in most people’s minds”. And here, in what is often considered the tech capital of the world, concerns are growing. At a panel discussion at Silicon Valley’s Computer History Museum this week, early AI entrepreneur Jerry Kaplan told a packed audience he has lived through four bubbles. He’s especially concerned now given the magnitude of money on the table as compared to the dot-com boom. There’s so much more to lose. “When [the bubble] breaks, it’s going to be really bad, and not just for people in AI,” he said.”

He is not wrong. Consider the next one, amounting to a speculated two trillion dollars ($2,000,000,000,000): when it hits, it could wipe out the retirement savings of nearly everyone for years. So how do you feel about your retirement being written off for decades? When you are 80+ and have millions upon millions you are just fine, but that is merely 2-5 people. The other 8,200,000,000 people? The young will be fine, and over 4 billion will be too young to care about their retirement, but the rest? Good luck, I say.

So what will happen to Stargate ($500B) when that bubble goes? I already see it as a failure, as the required power settings will not be able to fuel it, apart from the hundreds of validators needed, whose systems require power too. Then we see Microsoft thinking (and telling us) it is the next big thing, all whilst the basic settings aren’t out yet. Did anyone see the need for Shallow Circuits? Or the applied versions of Leon Lederman? No one realizes that he held the foundational setting of AI in quantum computing. You see, as I personally see it, AI cannot really work in binary technology; it requires a trinary setting, a simple stage of True, False and Both, because a statement isn’t always simply True or False. We learn that the hard way, but in IT we accept it. That setting will come to a head when we get to the real AI part of it, and that is (in part) why I scoff at the AI coffee being served in all places. And I like my sarcasm really hot (with two raw sugars and full cream milk).
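The True, False and Both idea can be made a little more concrete. The post gives no truth tables, so the sketch below assumes those of Graham Priest’s Logic of Paradox (LP), an existing three-valued logic whose values are exactly True, False and Both, ordered False < Both < True, with Both counting as “at least partly true”. The names `Truth`, `t_not`, `t_and`, `t_or` and `designated` are hypothetical, chosen only for illustration; this shows the semantics of such a logic, not how real AI or quantum hardware would use it.

```python
from enum import Enum


class Truth(Enum):
    """Hypothetical truth values, ordered FALSE < BOTH < TRUE as in Priest's LP (an assumption)."""
    FALSE = 0
    BOTH = 1
    TRUE = 2


def t_not(a: Truth) -> Truth:
    # Negation swaps TRUE and FALSE; BOTH maps to itself.
    return Truth(2 - a.value)


def t_and(a: Truth, b: Truth) -> Truth:
    # Conjunction takes the lower of the two values in the ordering.
    return Truth(min(a.value, b.value))


def t_or(a: Truth, b: Truth) -> Truth:
    # Disjunction takes the higher of the two values in the ordering.
    return Truth(max(a.value, b.value))


def designated(a: Truth) -> bool:
    # In LP a statement is accepted if it is at least partly true: TRUE or BOTH.
    return a in (Truth.TRUE, Truth.BOTH)


if __name__ == "__main__":
    p = Truth.BOTH
    contradiction = t_and(p, t_not(p))  # "p and not p"
    print(contradiction, designated(contradiction))  # Truth.BOTH True
```

With `p = Truth.BOTH`, the statement “p and not p” evaluates to Both and is still accepted, whereas in two-valued logic it is always False. That is the “it isn’t always True or False” behaviour the paragraph above describes.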

That is the setting we face, and whilst some will call the BBC article ‘doom speak’, I see it for what it is: a reminder that the AI frenzy is sales driven. People are eager to forget the simplest setting, the real deal of Microsoft and Builder.AI, which is that at present IT engineers are making the decisions for us. Expect a wave of class actions in 2027 and 2028 (optionally as early as 2026), with some cases still being drawn out even yesterday (see https://authorsguild.org/news/ai-class-action-lawsuits/ for details). You need to realise that this bubble was orchestrated, and that is why I like the term ‘Financial Engineering’. So be good, use the NIP setting properly and feel free to be creative. I was, and I gave Amazon an idea that could bank it billions. But not all ideas are golden, and I am willing to accept that I am not the carrier of golden ideas; the fact that someone saw the Lost and Found setting is proof of that.

Have a great day. I am 30 minutes from breakfast now, so off I go to brekkyville.